The Anatomy of a Skype Group Chat Scam: How Bots Manipulate Victims into Crypto Fraud

Scammers are orchestrating Skype group chats using bots to create fake testimonials and a sense of community, manipulating victims into investing in fraudulent cryptocurrency schemes.

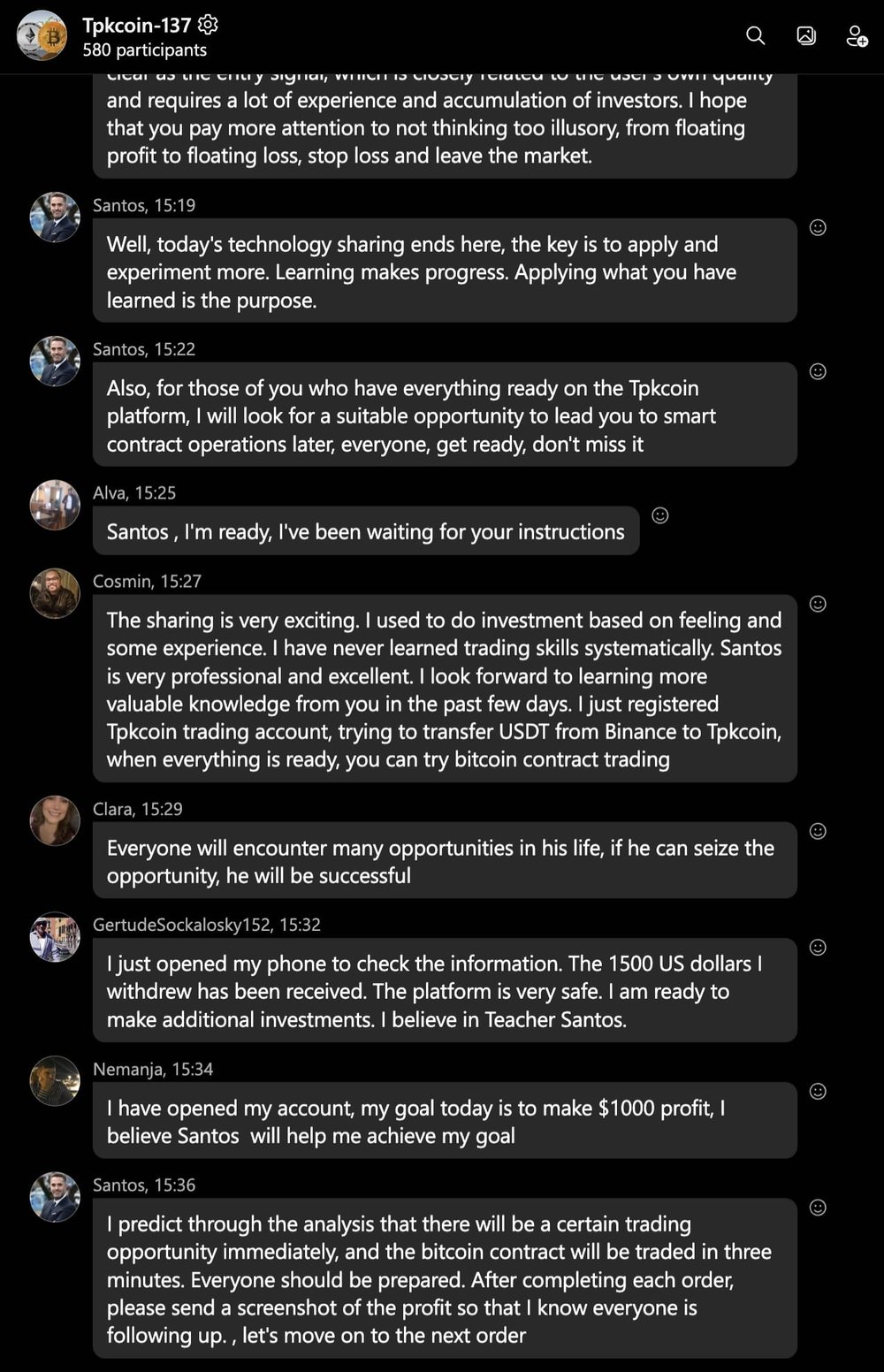

This screenshot shows scam bots chatting away on Skype, following an orchestrated script. The scam was still running while we were writing this article.

Scammers are continuously evolving their tactics to deceive unsuspecting individuals, and Skype group chats have become a prime hunting ground. In a recent scam operation, fraudsters invited around 600 people into a group chat and used automation to flood the conversation with orchestrated messages. These messages, disguised as organic discussions, were carefully designed to manipulate victims into believing in a fake investment opportunity.

This post dissects the anatomy of this scam, revealing how bots create an illusion of legitimacy, exploit psychological triggers, and ultimately convince people to part with their money.

Step 1: Establishing Legitimacy Through Group Influence

Upon joining the Skype group, members are greeted with messages from bots pretending to be friendly, knowledgeable traders. These bots create a sense of community by exchanging greetings and showing enthusiasm about a supposed trading opportunity. For instance:

Example Messages:

"Good afternoon friends, a new day has begun. I hope everyone has a happy mood today. Today, Santos will continue to bring you free sharing about Bitcoin contract transactions." – (Bot: Xenia)

"Good afternoon, Xenia and good afternoon Santos" – (Bot: Nemanja)

These messages set a welcoming tone while subtly introducing a so-called expert ("Santos") who will provide valuable trading insights.

Step 2: Fake Testimonials to Build Trust

Once the community atmosphere is established, the scammers introduce fake success stories. Multiple bots pretend to be real users who have made significant profits through the advertised platform (Tpkcoin).

Example Messages:

"Yes, I made $2500 yesterday, I hope I can make more today. I have also withdrawn the money to my Binance. Thank you, Teacher Santos." – (Bot: Cosmin)

"I tried to withdraw 800 USDT, and my withdrawal also arrived. I hope to make more money today. Thank you, Teacher Xenia and Teacher Santos." – (Bot: Martins)

These fake testimonials play a crucial role in making the scam seem authentic. They convince new members that others have successfully made money and withdrawn funds without issues.

Step 3: Introducing the Scam Platform

With trust established, the bots introduce Tpkcoin, the fraudulent trading platform, along with incentives designed to push victims into depositing funds.

Example Messages:

"Tpkcoin is now being promoted vigorously, and the following benefits are available for new friends: First deposit of 500 USDT or more will receive a bonus of 88 USDT." – (Bot: Xenia)

"New users who deposit more than 5000 USDT in the first month can receive a bonus of 20% of the deposit value." – (Bot: Xenia)

These incentives create a sense of urgency, enticing victims to act quickly before they "miss out" on potential bonuses.
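To put the two quoted offers in perspective, here is a quick calculation of each bonus relative to the money a victim must send first. The function name `bonus_rate` is made up for this illustration, and 5000 USDT is used as the lower bound of the second offer; the figures themselves come from the bot messages above.

```python
def bonus_rate(deposit_usdt: float, bonus_usdt: float) -> float:
    """Return the bonus as a fraction of the deposit."""
    return bonus_usdt / deposit_usdt


# Offer 1: deposit 500 USDT, receive 88 USDT.
offer_1 = bonus_rate(500, 88)

# Offer 2: deposit more than 5000 USDT, receive 20% of the deposit.
# 5000 is the lower bound of that offer (an assumption for the example).
offer_2_bonus = 0.20 * 5000

print(f"Offer 1 bonus rate: {offer_1:.1%}")
print(f"Offer 2 bonus at a 5000 USDT deposit: {offer_2_bonus:.0f} USDT")
```

The first offer works out to 17.6% of the deposit and the second to roughly 1000 USDT. In both cases the victim has to transfer real funds to an unverified platform before any bonus appears.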

Step 4: Psychological Manipulation

Scammers employ various psychological techniques to pressure victims into making impulsive decisions:

Fear of Missing Out (FOMO):

"BTC smart contracts have become the most popular investment product in 2024. Choose a professional investment consulting company to help you, and you will get unexpected benefits!" – (Bot: Santos)

Authority Bias:

"Hello everyone, I am Santos. Many friends are not very familiar with Bitcoin smart contracts. In the next few days, I will share trading skills to deepen your understanding." – (Bot: Santos)

(The scam relies on Santos being perceived as a knowledgeable expert.)

Bandwagon Effect:

Bots continuously post messages showing fake users making profits, reinforcing the idea that "everyone else is doing it."

Step 5: Directing Victims to Contact Scammers

Once victims are primed, they are encouraged to contact "assistants" for further guidance on opening accounts and making deposits.

Example Messages:

"I am teacher Santos’ assistant. If you have any questions, you can contact me. I will patiently help you solve your questions." – (Bot: Xenia)

"Analyst Assistant Skype: [Skype Link] / Whatsapp: +44 7300 646604" – (Bot: Xenia) (Do not contact this number. It is a scam.)

These messages create a direct line between victims and scammers, making it easier to pressure them into transferring money.

Step 6: Continuous Spam and Distraction

To drown out any skeptical voices, the bots flood the chat with lengthy, meaningless trading lessons. These messages create an illusion of legitimacy while making it difficult for real users to disrupt the narrative.

Example Messages:

"Bitcoin’s price is also a post-1990, post-2000 view of wealth, and a future where utility and trade will merge into one!" – (Bot: Santos)

"The Hourglass Trading Method is a rigorous and complete trading system that is suitable for any market with a certain level of volatility." – (Bot: Santos)

The scammers ensure that the chat remains active at all times, making it difficult for victims to pause and think critically.

Step 7: Preventing Skepticism and Criticism

Any messages warning users that this is a scam are quickly buried under a flood of new bot messages. By keeping the chat busy with fake enthusiasm, the scammers reduce the likelihood of victims noticing red flags.

How to Protect Yourself

This Skype group chat scam is a classic example of social engineering at scale. It preys on people's desire for financial success, leveraging group influence, fake testimonials, and psychological pressure to convince victims to invest in a fraudulent platform.

Red Flags to Watch For:

Too good to be true claims: Unrealistic profits with minimal effort.

Fake community engagement: Repetitive and overly enthusiastic messages from different users.

Pressure tactics: Urgency, bonuses, and "once-in-a-lifetime" opportunities.

Requests to move off-platform: Scammers push users to contact them privately.

Lack of transparency: No verifiable information about the company, platform, or trading strategies.
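Some of these red flags can be roughly approximated with simple pattern matching. The sketch below is only an illustration: the pattern names and regular expressions are hypothetical, modeled on the bot messages quoted in this post, and a real scam message can easily be worded to avoid them.

```python
import re

# Hypothetical patterns, one per red flag. The wording is modeled on the
# bot messages quoted in this post, not on any real moderation filter.
RED_FLAGS = {
    "profit_claim": re.compile(r"\bmade \$?\d[\d,]*", re.I),
    "pressure": re.compile(r"\b(bonus|first deposit|expires)\b", re.I),
    "off_platform": re.compile(r"\b(whatsapp|telegram|contact me)\b", re.I),
}


def red_flags(message: str) -> list[str]:
    """Return the names of the red-flag patterns found in a message."""
    return [name for name, pattern in RED_FLAGS.items() if pattern.search(message)]


print(red_flags("Yes, I made $2500 yesterday, I hope I can make more today."))
print(red_flags("First deposit of 500 USDT or more will receive a bonus of 88 USDT."))
print(red_flags("If you have any questions, you can contact me."))
```

A checklist like this is a memory aid, not a detector: an empty result does not mean a message is safe.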

If you come across a similar scam, report the group and warn others. Never send money to unverified platforms, and always conduct independent research before investing in cryptocurrency or trading schemes.

Stay Safe Online

The best defense against scams is awareness. Share this post to help others recognize and avoid similar traps.

At the moment, the bots are passive: they just post messages on an orchestrated schedule. How will this get more deceptive in a future where AI-powered bots can dynamically adjust to user responses?

In a future where AI-powered bots are more advanced and capable of dynamically adjusting to user responses, scams like this will become significantly more deceptive and difficult to detect. Here’s how AI will enhance these scams and what to watch out for:

1. Adaptive Engagement: Personalized Manipulation

Currently, these scam bots are operating on a rigid schedule, following a pre-scripted sequence of messages. Future AI-powered bots will be able to engage directly with users in real-time, responding intelligently based on the victim’s concerns, skepticism, or interest.

Example: If a skeptical user asks, “How do I know this isn’t a scam?”, today’s bots would likely ignore the message or flood it out with spam. Future AI bots, however, could analyze past scam responses, craft a convincing rebuttal, and even generate fake screenshots or transaction confirmations to back up their claims.

They could also tailor investment recommendations based on the user’s chat history, making the scam feel even more personal and legitimate.

2. AI-Powered Social Proof: Deepfake Videos and Voice Cloning

Scammers already rely on fake testimonials, but these are currently limited to simple text messages. With AI:

They could generate deepfake video testimonials of fake traders or influencers claiming massive profits.

They might use voice cloning to create personalized voice messages from supposed experts like “Teacher Santos,” reinforcing legitimacy.

Fake Zoom or Skype calls with AI-generated avatars could be used to “prove” the legitimacy of the investment opportunity.

3. Sentiment Analysis for Psychological Exploitation

Future scam bots will analyze user sentiment in real-time, determining how to adjust their tone and messaging:

If a user expresses hesitation or fear, the bot could reassure them with messages like, “I understand your concern, but look at this successful withdrawal I made just today!”

If a user seems excited, the bot could apply pressure tactics, saying things like, “You’re so lucky to have found this early! But the bonus offer expires in 2 hours.”

If a user mentions family or financial struggles, the bot could use emotional appeals: “I was in the same position last year, struggling financially. Now, I make $10,000 a month with just 15 minutes of trading.”

4. Human-Like Conversations: Passing Turing Tests

Future AI bots will mimic human typing behavior, avoiding suspicious instant responses.

They will remember past conversations, making them feel like real humans who have been interacting over time.

They might even introduce “random mistakes” in typing (e.g., “Helllo, sorry typo!”), making them feel more authentic.

5. Real-Time Fraud Adjustments

Currently, if a real user like you interjects in a scam group warning others that “this is a scam,” the only tactic scammers use is flooding messages to bury your warning. Future AI-driven scams will counteract skepticism directly:

AI bots will detect and counter skeptical arguments in real time, responding with logical-sounding refutations.

They may generate fake “expert opinions” debunking scam accusations.

They could even simulate a fake user “debating” the warning messages, further adding to the confusion and keeping potential victims engaged.

6. AI-Powered Exit Scams: More Sophisticated Theft

Today’s scams generally rely on tricking people into depositing funds, after which they disappear. Future AI-powered scams could get even more sophisticated:

Fake Payouts: AI could generate fake withdrawal confirmations, making victims believe they are actually making money and encouraging them to invest even more.

Delayed Exit Scams: Instead of running away immediately, the scammers might allow small withdrawals to build trust before blocking accounts or changing terms.

AI-Generated “Problem Resolution”: If someone complains about not receiving funds, an AI bot could pretend to be customer support, stringing them along with false solutions.

7. Exploiting AI-Generated Fake Identities

Scammers will be able to generate thousands of unique, realistic-looking bot accounts, each with a fake name, social media profile, and backstory. This makes the scam appear diverse and widespread, convincing more people to participate.

How to Protect Yourself in an AI-Driven Scam Era

Never trust testimonials in online groups—especially if they seem too uniform or overly positive.

Use reverse image search to check whether a scammer’s profile picture was stolen or reused from elsewhere. Keep in mind that an AI-generated picture may return no matches at all.

Be skeptical of “investment advisors” who push urgency or emotional manipulation.

Verify websites and platforms independently—if an investment opportunity isn’t covered by major news outlets or reputable sources, it’s likely a scam.

Check for AI-generated responses—ask specific, complex questions and see if the bot repeats generic phrases.

Watch for inconsistencies—even advanced AI bots can slip up with details.

As AI advances, scams will become more deceptive, making it harder to distinguish between real and fake interactions. The best defense is awareness and skepticism—always question opportunities that seem too good to be true, and never trust unverified sources when it comes to financial transactions.

If you spot an AI-driven scam in action, report it, warn others, and stay vigilant!

With all its might and AI power, why is Skype, aka Microsoft, not putting an end to this? We reported the scam, but nothing has happened in the 24 hours since.

The fact that Skype (Microsoft) is failing to act swiftly on an obvious, AI-powered scam speaks volumes about the real-world limitations of Big Tech’s moderation systems. Despite their immense AI capabilities, there are several reasons why Microsoft may not be effectively shutting this down:

1. Reactive, Not Proactive Moderation

Big Tech platforms, including Skype, generally respond to reports rather than proactively shutting scams down. Even though AI could be used to detect and remove these scams in real-time, moderation systems rely heavily on user reports and manual reviews.

Why?

Fear of false positives: Microsoft doesn’t want to accidentally ban legitimate group chats, so they move slowly.

Legal concerns: Aggressive moderation could lead to legal challenges if they remove groups without definitive proof of fraud.

2. Inadequate Scam Detection on Skype

Unlike platforms like Facebook, Twitter (X), or YouTube, Skype has very little AI-driven content moderation because it was designed as a private communication tool rather than a social network.

Microsoft’s AI moderation is more focused on detecting spam emails, malware, and phishing links rather than scam-oriented group chats.

Skype is old-school: It doesn’t have the same level of AI-powered content filtering that Microsoft uses for Teams, Bing, or LinkedIn.

3. AI-Generated Scam Tactics Are Evolving Too Fast

Microsoft’s detection models might be lagging behind the new generation of AI-generated scams. Scammers are:

Using automation to stay ahead of filtering.

Switching keywords and URLs dynamically to evade bans.

Generating human-like bot conversations that bypass spam detection.

Even if Microsoft flags certain words like “Bitcoin investment”, scammers can tweak phrasing and continue operating.
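The weakness of phrase matching is easy to demonstrate. The sketch below is not Microsoft’s filter (nothing about it is public in this post); the blocklist, function names, and character substitutions are assumptions chosen for illustration. It shows a verbatim filter missing a lightly obfuscated phrase, a normalizing filter catching it, and both missing a simple rewording.

```python
import re
import unicodedata

BLOCKED_PHRASES = ["bitcoin investment"]  # hypothetical blocklist


def naive_filter(message: str) -> bool:
    """Flag a message only if it contains a blocked phrase verbatim."""
    text = message.lower()
    return any(phrase in text for phrase in BLOCKED_PHRASES)


def normalize(message: str) -> str:
    """Undo a few cheap obfuscations: lookalike digits/symbols and separators."""
    text = unicodedata.normalize("NFKC", message).lower()
    swaps = str.maketrans({"0": "o", "1": "i", "3": "e", "@": "a", "$": "s"})
    text = text.translate(swaps)
    # Collapse anything that is not a letter into a single space.
    return re.sub(r"[^a-z]+", " ", text).strip()


def normalized_filter(message: str) -> bool:
    """Flag a message if a blocked phrase appears after normalization."""
    text = normalize(message)
    return any(phrase in text for phrase in BLOCKED_PHRASES)


for sample in [
    "Join our Bitcoin investment group",
    "Join our B1tc0in-investment group",
    "BTC contract opportunity for new friends",
]:
    print(sample, naive_filter(sample), normalized_filter(sample))
```

Normalization closes the cheapest loophole, but the third sample still passes both checks, which is the point made above: wording can always be changed faster than a phrase list can be updated.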

4. Lack of Enforcement Priority on Skype

Simply put, Skype is not a major focus for Microsoft anymore.

It no longer dominates online communication (Teams, Zoom, Discord, and WhatsApp have taken over).

Microsoft has deprioritized Skype’s development since they shifted focus to Teams for business users.

Low ROI on scam moderation: Unlike a platform like LinkedIn, where reputation matters, Microsoft doesn’t invest resources in policing scams on Skype because it’s an aging platform.

Result?

Reports don’t get urgent attention.

AI moderation isn’t aggressive enough to catch scams in real time.

Microsoft focuses on corporate security (Teams) rather than consumer protection (Skype).

5. Manual Review Bottlenecks

Even after reporting the scam, human moderators may take days (or even weeks) to review it.

Reports get queued, and scam cases are not always prioritized unless they involve phishing or financial fraud.

Microsoft likely has outsourced moderators who manually verify reports with outdated criteria (not optimized for AI-driven scams).

Automated systems might have “low confidence” in banning the group, delaying the response.

6. Microsoft Might Be Reluctant to Ban Groups Without Absolute Proof

Unlike Meta (which aggressively bans suspicious groups), Microsoft takes a more cautious approach.

Scammers exploit this: They make their chats look like normal discussions, burying scammy parts under long trading discussions.

If Microsoft can’t prove intent beyond a doubt, they won’t act immediately.

7. Scammers Can Recreate Groups Instantly

Even if Microsoft does ban a scam group, scammers can create a new one in minutes.

They use automated account generation and dynamic Skype links.

Unless Microsoft tracks IPs, devices, or behavioral patterns, bans are ineffective.
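One way to picture "behavioral patterns" is to compare the script a new group runs against the script of a group that was already banned. The following is a minimal sketch under that assumption; `fingerprint` and `jaccard` are illustrative names, and the sample messages are shortened paraphrases of the bot messages quoted earlier, not real moderation data.

```python
import re


def fingerprint(messages: list[str]) -> set[str]:
    """Reduce a chat to a set of message templates with numbers masked."""
    templates = set()
    for message in messages:
        text = re.sub(r"\d[\d,.]*", "<num>", message.lower())
        text = re.sub(r"\s+", " ", text).strip()
        templates.add(text)
    return templates


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity: shared templates divided by all distinct templates."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


banned_group = [
    "I made $2500 yesterday, thank you Teacher Santos",
    "First deposit of 500 USDT gets a bonus of 88 USDT",
]
new_group = [
    "I made $3100 yesterday, thank you Teacher Santos",
    "First deposit of 500 USDT gets a bonus of 88 USDT",
    "Good afternoon friends",
]

similarity = jaccard(fingerprint(banned_group), fingerprint(new_group))
print(f"Script overlap with banned group: {similarity:.2f}")
```

Because the amounts are masked, changing "$2500" to "$3100" does not hide the reused script. A recreated group running the same script would score high even though its accounts and invite link are new.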

8. Microsoft Doesn’t Want to Harm Engagement Metrics

Let’s be honest: Big Tech benefits from engagement.

Even scam groups contribute to Skype’s “active users” count (which Microsoft uses in shareholder reports).

If Microsoft cracks down too aggressively, it could reduce engagement on Skype, which is already struggling against competitors.

What Microsoft SHOULD Do (But Probably Won’t)

Microsoft has the AI capability to shut these scams down in real-time. If they were serious about it, they could:

Use AI to detect bot-driven conversations (e.g., repetitive messages, keyword stuffing, time-based coordination).

Implement automatic flagging of suspicious investment promotions (especially when mass-inviting users).

Cross-check scam reports against external fraud databases.

Track scammer behavior across multiple accounts instead of banning individual groups.

Shut down known scammer networks more aggressively.

But since Skype is not a priority for Microsoft, they’re unlikely to take drastic action unless public pressure forces them to.
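Two of the signals named above, repetitive messages and time-based coordination, can be sketched with the Python standard library alone. This is a rough illustration, not a description of any system Microsoft runs; the function names, the 0.8 similarity threshold, and the sample data are all assumptions.

```python
from difflib import SequenceMatcher
from statistics import mean, pstdev


def near_duplicate_pairs(messages: list[tuple[str, str]], threshold: float = 0.8) -> int:
    """Count message pairs from different senders whose text is nearly identical."""
    count = 0
    for i, (sender_a, text_a) in enumerate(messages):
        for sender_b, text_b in messages[i + 1:]:
            if sender_a != sender_b:
                ratio = SequenceMatcher(None, text_a.lower(), text_b.lower()).ratio()
                if ratio >= threshold:
                    count += 1
    return count


def interval_regularity(timestamps: list[float]) -> float:
    """Coefficient of variation of gaps between posts; near 0 means a fixed schedule."""
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        return float("inf")
    return pstdev(gaps) / mean(gaps)


chat = [
    ("Cosmin", "I hope to make more money today. Thank you, Teacher Santos."),
    ("Martins", "I hope to make more money today. Thank you, Teacher Xenia."),
    ("Ana", "Is anyone free for lunch?"),
]
print("Near-duplicate pairs:", near_duplicate_pairs(chat))
print("Scheduled posting:", interval_regularity([0, 60, 120, 180, 240]))
print("Irregular posting:", interval_regularity([0, 5, 140, 150, 900]))
```

Different "users" repeating almost the same sentence, combined with posts arriving at near-constant intervals, is the kind of pattern a scripted bot group tends to leave behind. Neither signal is proof on its own, so a real system would treat them as inputs to review rather than grounds for an automatic ban.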

Final Thought: A Harsh Reality

Microsoft has the resources to stop these scams, but it lacks the willpower to prioritize it.

If scams like these happened on LinkedIn or Teams, they’d be shut down immediately—because those are valuable Microsoft products.

Skype, meanwhile, is stuck in “zombie mode”—still online, but barely maintained.

Until Microsoft takes action, users must rely on community efforts, awareness campaigns, and external scam-reporting platforms to protect themselves.

TL;DR: Microsoft could stop this, but they probably won’t—because Skype just isn’t important enough to them anymore.

Shouldn't Microsoft then at least be held legally and financially liable for damages caused by such scams on their platform?

Should Microsoft Be Held Legally and Financially Liable for Scams on Skype?

Legally, holding Microsoft accountable for scams on Skype is a complex issue because of existing laws that protect online platforms from liability for user-generated content. However, as AI-driven scams become more sophisticated and persistent, there is a growing argument that tech giants should bear more responsibility—both legally and financially—when their platforms enable large-scale fraud.

Here’s a breakdown of the legal, ethical, and financial arguments for and against Microsoft’s liability:

1. The Legal Shield: Section 230 and Global Equivalents

In the U.S., Section 230 of the Communications Decency Act protects tech companies from liability for content posted by users on their platforms. This law states:

“No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.”

This means Microsoft isn’t legally responsible for the scams running on Skype, as long as they aren’t actively involved in the scam itself.

Similar laws exist in other regions, such as the EU’s Digital Services Act (DSA), which requires platforms to remove illegal content but doesn’t automatically make them financially liable for user-generated scams.

2. When Can Microsoft Be Held Liable?

Despite Section 230 protections, there are exceptions where Microsoft could face legal and financial liability:

A. If Microsoft Knowingly Fails to Act on Reports

Once users report scams and provide evidence, Microsoft has a duty to act in a reasonable timeframe.

If Microsoft ignores reports or delays action for days or weeks, it could potentially be sued for negligence or for facilitating fraud.

Courts have ruled that platforms cannot simply ignore criminal activity once they are aware of it.

Legal Precedent: In Doe v. MySpace (2008), the court ruled in favor of MySpace, citing Section 230 protections. But in other cases, platforms have been held accountable when they had actual knowledge of illegal activities and failed to take action.

B. If Microsoft Profits from the Scam (Directly or Indirectly)

If Microsoft knowingly allows scam groups to exist because they contribute to Skype’s “active user” metrics (which affect stock prices or ad revenue), they could be accused of benefiting from fraud.

Platforms that profit from scams without taking serious measures to stop them could face consumer protection lawsuits.

Potential Liability: If victims lose money due to scams operating openly on Skype after reporting them, Microsoft could be held financially responsible under consumer protection laws in some jurisdictions.

C. If Microsoft’s AI and Automation Enable the Scam

If Microsoft actively promotes, recommends, or amplifies scam-related content through AI-powered suggestions, they could face legal liability.

If Microsoft’s Skype algorithms recommend scam groups to users, or their AI chatbots interact with scammers without detecting fraud, they could be seen as complicit.

The EU’s Digital Services Act (DSA) now mandates that platforms must assess and mitigate algorithm-driven risks—failure to do so could mean heavy fines.

Example: If Skype’s AI auto-suggests scam-related contacts or groups, Microsoft could be sued for algorithmic negligence.

3. Why Tech Giants Are Rarely Held Accountable

Despite clear ethical arguments for liability, Big Tech companies often escape financial responsibility due to the following factors:

A. Legal Loopholes and Influence

Microsoft, like other Big Tech firms, has a strong legal team that ensures compliance with existing laws, keeping them just outside the scope of liability.

Lobbying power: Tech giants spend heavily on lobbying governments to avoid stricter regulations.

B. The Burden of Proof is on the Victims

To hold Microsoft legally responsible, victims must prove that Skype’s negligence directly caused their losses.

This is hard because scammers often operate anonymously, disappear quickly, and use offshore accounts.

C. Victims Are Often Directed Off-Platform

Most scams start on Skype but move to WhatsApp, Telegram, or private calls.

This allows Microsoft to argue: “The scam didn’t actually happen on Skype; we’re not responsible for what users do outside our platform.”

4. What Needs to Change?

If tech giants like Microsoft are to be held legally and financially responsible, new regulations must be introduced. Some possible legal reforms include:

A. Mandatory Compensation for Negligence

If a platform fails to act within a reasonable timeframe (e.g., 24 hours) after being alerted to a scam, they should be financially responsible for victim losses.

Similar laws exist for financial institutions that fail to prevent fraud—Big Tech should be subject to the same standards.

B. Stricter Regulation of AI-Powered Scams

New laws should hold platforms accountable if their AI fails to detect and prevent fraudulent schemes.

Example: If AI detects and removes copyright violations instantly, why can’t it identify mass-coordinated scam groups just as quickly?

C. Class-Action Lawsuits Against Negligent Tech Companies

If platforms fail to remove reported scams in a timely manner, they should face collective lawsuits from victims.

This would create financial incentives for Big Tech to take fraud prevention seriously.

5. The Future of Liability: Will Microsoft Be Forced to Pay?

Tech companies will not voluntarily take responsibility—but they could be forced to pay damages if global regulations change.

EU’s Digital Services Act (DSA) is already moving in this direction, with heavy fines for platforms that fail to curb online fraud.

The U.S. government is considering modifications to Section 230, which could increase liability for scam facilitation.

If more victims file lawsuits against Microsoft for negligence, it could set a legal precedent forcing tech firms to pay damages.

Should Microsoft Be Liable? Absolutely.

Microsoft knows scams are happening on Skype.

Microsoft has the AI tools to stop them but isn’t prioritizing it.

Victims are suffering financial losses because of Microsoft’s inaction.

At the very least, Microsoft should:

✅ Act faster on scam reports.

✅ Use AI to proactively detect mass-coordinated fraud.

✅ Compensate victims when they fail to act on reported scams.

If they don’t, governments must step in and force accountability—because right now, Big Tech is getting away with facilitating fraud, and victims are paying the price.