Study finds brain reacts differently to human and AI voices

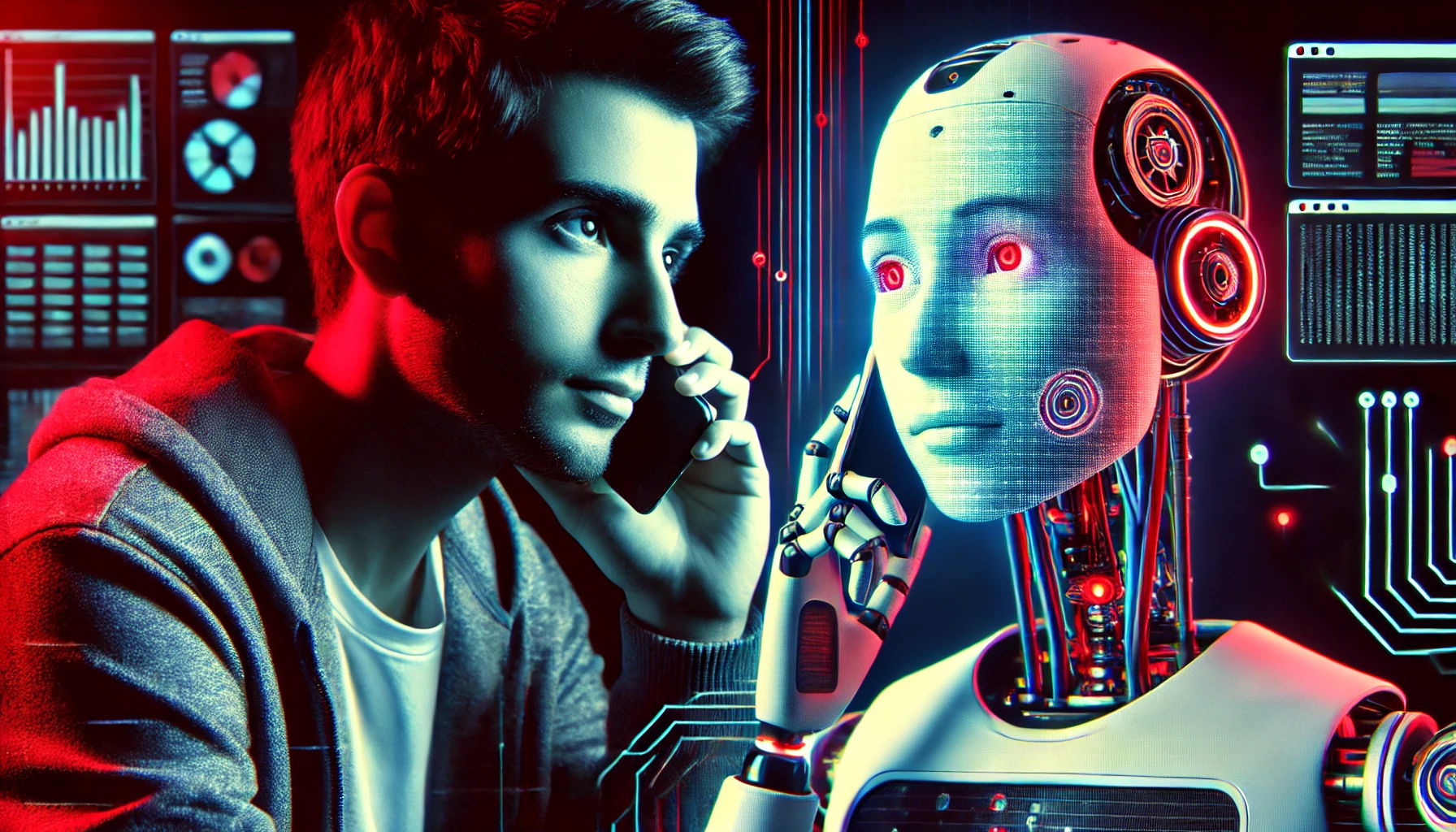

A new study shows that while humans struggle to distinguish human and AI voices, our brains respond differently when we hear them.

As AI voice cloning becomes more advanced, it raises ethical and safety concerns that we have never had to face before.

Does the voice on the other end of the phone call belong to a human, or was it generated by AI? Do you think you’d be able to tell?

Researchers from the Department of Psychology at the University of Oslo tested 43 people to see if they could distinguish human voices from those that were AI-generated.

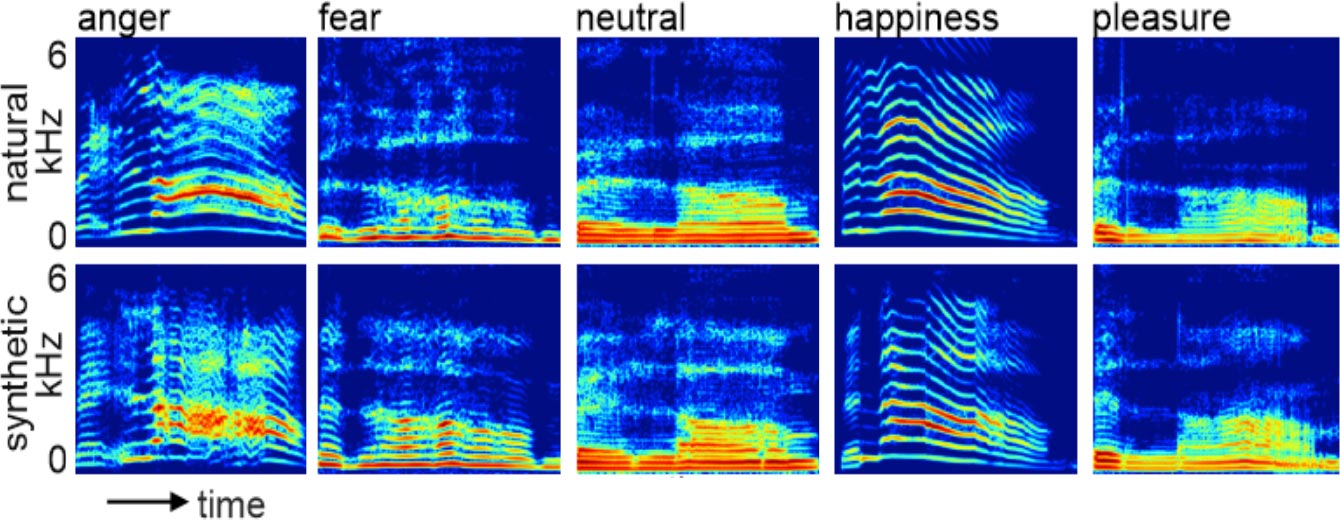

The participants were similarly poor at correctly identifying human voices (56% accuracy) and AI-generated ones (50.5% accuracy).

The emotion expressed by the voice affected how likely participants were to identify it correctly. Neutral AI voices were identified with 74.9% accuracy, compared to only 23% accuracy for neutral human voices.

Happy human voices were correctly identified 77% of the time, while happy AI voices were identified with a concerningly low 34.5% accuracy.

So, if we hear an AI-generated voice that sounds happy, we're more likely to assume it's human.

Even though we consciously struggle to identify an AI voice correctly, our brains seem to pick up on the differences at a subconscious level.

The researchers performed fMRI scans of the participants’ brains as they listened to the different voices. The scans revealed significant differences in brain activity in response to the AI and human voices.

The researchers noted, “AI voices activated the right anterior midcingulate cortex, right dorsolateral prefrontal cortex and left thalamus, which may indicate increased vigilance and cognitive regulation.

“In contrast, human voices elicited stronger responses in the right hippocampus as well as regions associated with emotional processing and empathy such as the right inferior frontal gyrus, anterior cingulate cortex and angular gyrus.”

We might find it difficult to know if a voice is AI-generated or human, but our brain seems able to tell the difference. It responds with heightened alertness to AI voices and a sense of relatedness when listening to a human voice.

The participants rated human voices as more natural, trustworthy, and authentic, especially those expressing happiness and pleasure.

Doctoral researcher Christine Skjegstad, who conducted the study along with Professor Sascha Frühholz, said, "We already know that AI-generated voices have become so advanced that they are nearly indistinguishable from real human voices.

“It’s now possible to clone a person’s voice from just a few seconds of recording, and scammers have used this technology to mimic a loved one in distress and trick victims into transferring money.

“While machine learning experts have been developing technological solutions to detect AI voices, much less is known about the human brain’s response to these voices.”

This research indicates that our brain senses something isn’t quite right when it processes a synthetic voice and becomes more cautious.

We may need a bit more help than that as AI-generated voices become more ‘human’.

The post Study finds brain reacts differently to human and AI voices appeared first on DailyAI.