When the model hallucinates and you don't know it

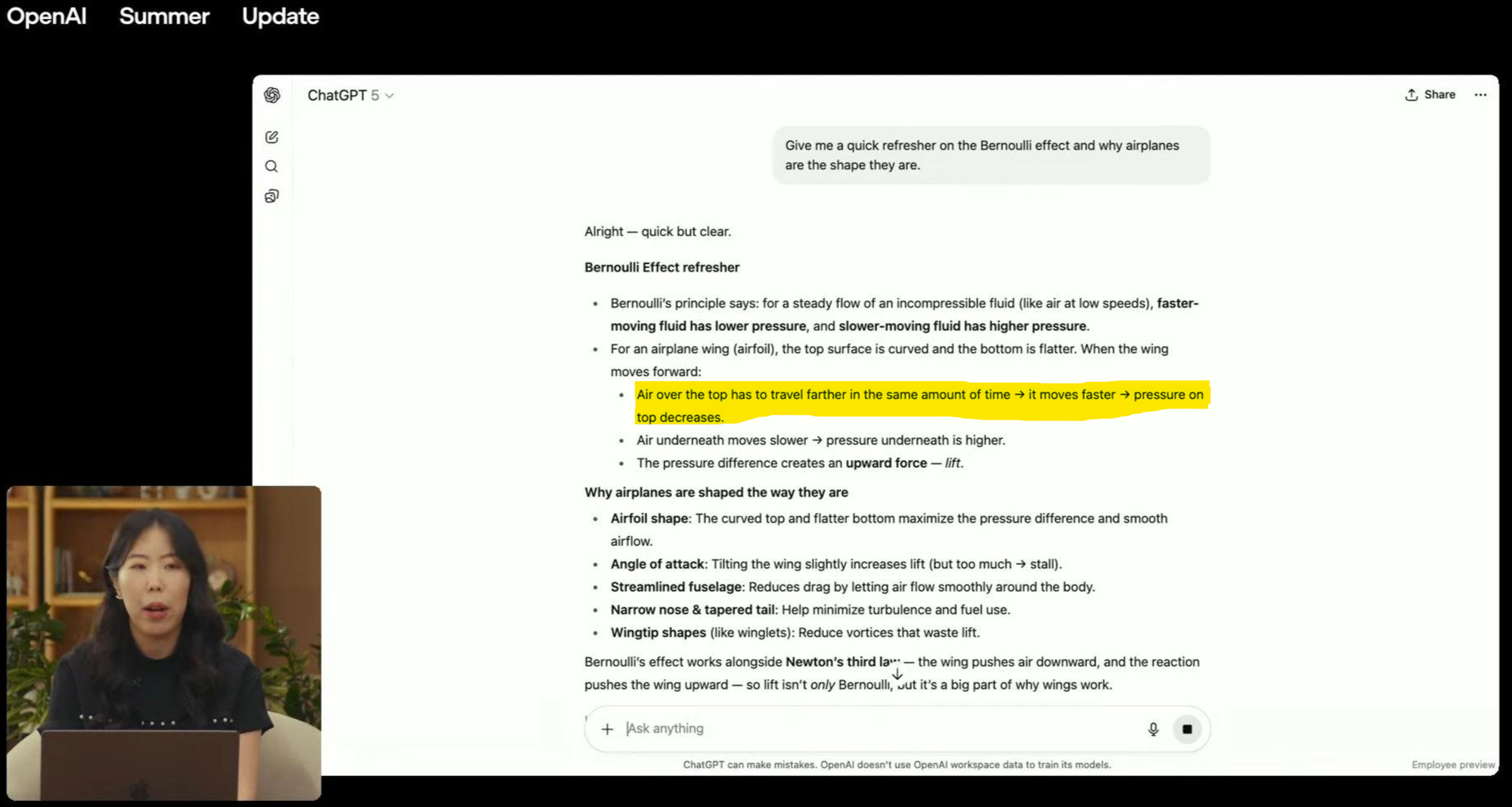

Models will hallucinate, sometimes in the middle of a live demo, as happened at OpenAI's launch demo for GPT-5.

One thing that has stayed consistent over the past few years of AI is model hallucination. It happens, and while there are ways to reduce it, it will sometimes surface in large, very public settings, such as the OpenAI launch demo for GPT-5.

Question: if the model hallucinates and neither the speaker nor the audience catches it, whose fault is it? And what if the presenters at the GPT-5 launch went further and built an interactive webpage around the hallucination (here, the "Equal Transit" explanation of how a wing generates lift) without realizing it was wrong?

Before we answer that, here is NASA's explanation of why the statement presented in the demo is wrong:

"The problem with the 'Equal Transit' theory is that it attempts to provide us with the velocity based on a non-physical assumption (the molecules meet at the aft end). The actual velocity over the top of an airfoil is much faster than that predicted by the 'Longer Path' theory and particles moving over the top arrive at the trailing edge before particles moving under the airfoil." (NASA, n.d.)

In the case of the GPT-5 launch, the blame falls on both sides. On one side, the model is at fault because it didn't recognize that the source it was drawing on was wrong.

On the other, the presenters are at fault for not fact-checking the AI's output and calling out the error. Models will keep getting more accurate over time, but they will still make mistakes. I think that should reassure people that they are still needed, even alongside cutting-edge AI.

August 15th update: In another article, we discussed how models struggle to fix bugs in your code because of debugging decay, where each attempt to fix something makes the code worse. The root cause of that decay is context pollution.

Context pollution happens when the AI makes a bad assumption and never questions it, or makes an error that you don't catch. Either way, the mistake stays in the conversation, and the model keeps referring back to it as the chat goes on.

In the GPT-5 example, telling the AI that the "Equal Transit" theory is wrong would have fixed the context, and the presenters could have built a better demo. Having someone on your team with domain knowledge can help you avoid public-facing mistakes like the one at the GPT-5 launch.

You can watch the demo for yourself in OpenAI's launch video (OpenAI, 2025).

Sources

NASA. (n.d.). Incorrect theory of flight: Equal transit time. NASA Glenn Research Center. Retrieved August 15, 2025, from https://www.grc.nasa.gov/www/k-12/VirtualAero/BottleRocket/airplane/wrong1.html

OpenAI. (2025, August 7). Introducing GPT-5 [Video]. YouTube. https://www.youtube.com/watch?v=0Uu_VJeVVfo