Humanity’s Last Exam wants your tough questions to stump AI

Benchmarks are struggling to keep up with advancing AI model capabilities, and the Humanity’s Last Exam project wants your help to fix this.

The project is a collaboration between the Center for AI Safety (CAIS) and AI data company Scale AI. Its goal is to measure how close we are to expert-level AI systems, something existing benchmarks can no longer do.

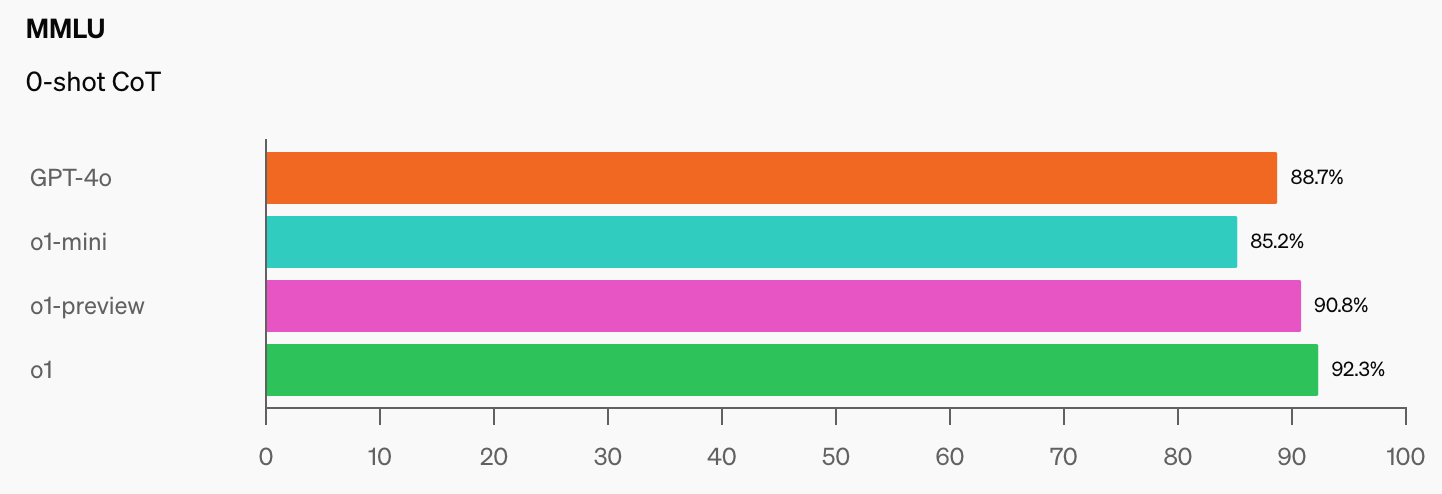

OpenAI and CAIS developed the popular MMLU (Massive Multitask Language Understanding) benchmark in 2021. Back then, CAIS says, “AI systems performed no better than random.”

The impressive performance of OpenAI’s o1 model has “destroyed the most popular reasoning benchmarks,” according to Dan Hendrycks, executive director of CAIS.

Once AI models hit 100% on the MMLU, how will we measure them? CAIS says, “Existing tests now have become too easy and we can no longer track AI developments well, or how far they are from becoming expert-level.”

Given the jump in benchmark scores that o1 delivered over GPT-4o’s already impressive figures, it likely won’t be long before an AI model aces the MMLU.

This is objectively true. pic.twitter.com/gorahh86ee

— Ethan Mollick (@emollick) September 17, 2024

Humanity’s Last Exam is asking people to submit questions that would genuinely surprise you if an AI model answered them correctly. The organizers want PhD-level exam questions, not the ‘how many Rs are in strawberry?’ type of question that trips up some models.

Scale explained that “As existing tests become too easy, we lose the ability to distinguish between AI systems which can ace undergrad exams, and those which can genuinely contribute to frontier research and problem solving.”

If you have an original question that could stump an advanced AI model, you could have your name added as a co-author of the project’s paper and share in a $500,000 prize pool that will be awarded for the best questions.

To give you an idea of the level the project is aiming for, Scale explained that “if a randomly selected undergraduate can understand what is being asked, it is likely too easy for the frontier LLMs of today and tomorrow.”

There are a few interesting restrictions on the kinds of questions that can be submitted. The project doesn’t want anything related to chemical, biological, radiological, or nuclear weapons, or to cyberweapons used to attack critical infrastructure.

If you think you’ve got a question that meets the requirements, you can submit it here.

The post Humanity’s Last Exam wants your tough questions to stump AI appeared first on DailyAI.