DAI#57 – Tricky AI, exam challenge, and conspiracy cures

Welcome to this week’s roundup of AI news made by humans, for humans.

This week, OpenAI told us that it’s pretty sure o1 is kinda safe.

Microsoft gave Copilot a big boost.

And a chatbot can cure your belief in conspiracy theories.

Let’s dig in.

It’s pretty safe

We were caught up in the excitement of OpenAI’s release of its o1 models last week until we read the fine print. The model’s system card offers interesting insight into the safety testing OpenAI did, and the results may raise some eyebrows.

It turns out that o1 is smarter but also more deceptive, earning a “medium” danger level according to OpenAI’s rating system.

Despite o1 being very sneaky during testing, OpenAI and its red teamers say they’re fairly sure it’s safe enough to release. Not so safe if you’re a programmer looking for a job.

If OpenAI’s o1 can pass OpenAI’s research engineer hiring interview for coding — 90% to 100% rate…

……then why would they continue to hire actual human engineers for this position?

Every company is about to ask this question. pic.twitter.com/NIIn80AW6f

— Benjamin De Kraker (@BenjaminDEKR) September 12, 2024

Copilot upgrades

Microsoft unleashed Copilot “Wave 2,” which will give your productivity and content production an additional AI boost. If you were on the fence about Copilot’s usefulness, these new features may be the clincher.

The Pages feature and the new Excel integrations are really cool. The way Copilot accesses your data does raise some privacy questions, though.

More strawberries

If all the recent talk about OpenAI’s Strawberry project gave you a craving for the berry, then you’re in luck.

Researchers have developed an AI system that promises to transform how we grow strawberries and other agricultural products.

This open-source application could have a huge impact on food waste, harvest yields, and even the price you pay for fresh fruit and veg at the store.

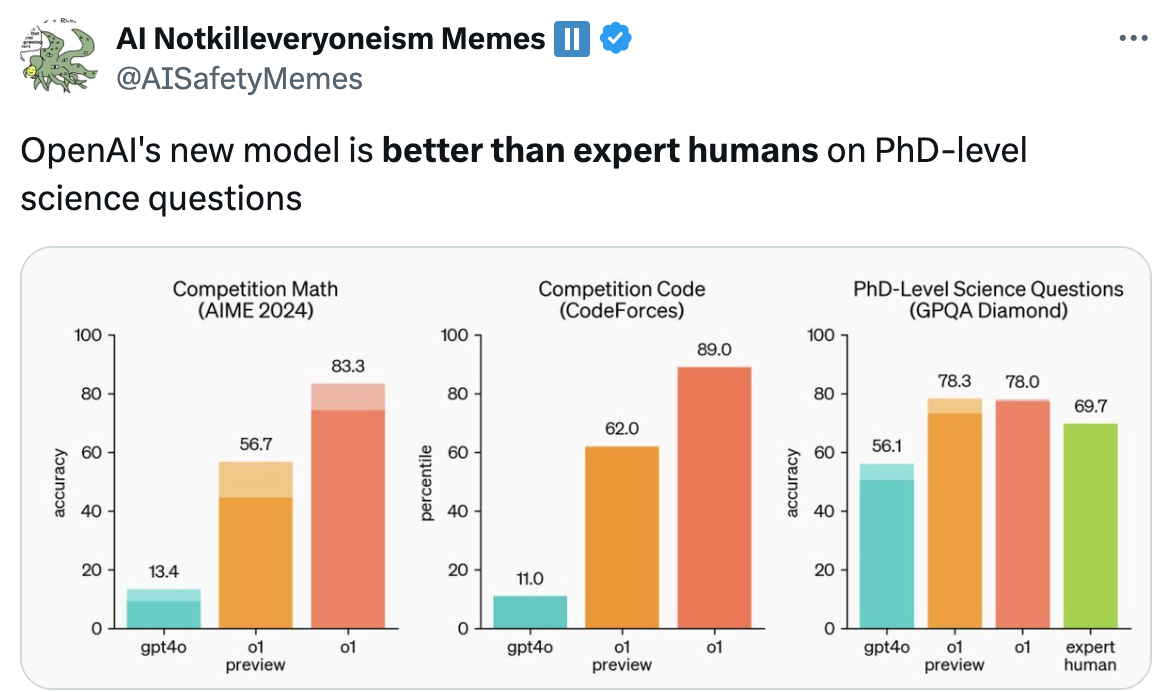

Too easy

AI models are getting so smart now that our benchmarks to measure them are just about obsolete. Scale AI and CAIS launched a project called Humanity’s Last Exam to fix this.

They want you to submit tough questions that you think could stump leading AI models. If an AI can answer PhD-level questions, then we’ll get a sense of how close we are to achieving expert-level AI systems.

If you think you have a good one, you could win a share of $500,000. It’ll have to be really tough, though.

Curing conspiracies

I love a good conspiracy theory, but some of the things people believe are just crazy. Have you tried convincing a flat-earther with simple facts and reasoning? It doesn’t work. But what if we let an AI chatbot have a go?

Researchers built a chatbot using GPT-4 Turbo and achieved impressive results in changing people’s minds about the conspiracy theories they believed in.

It does raise some awkward questions about how persuasive AI models are and who decides what ‘truth’ is.

Just because you’re paranoid doesn’t mean they’re not after you.

Stay cool

Is having your body cryogenically frozen part of your backup plan? If so, you’ll be happy to hear AI is making this crazy idea slightly more plausible.

A company called Select AI used AI to accelerate the discovery of cryoprotectant compounds. These compounds stop ice crystals from forming in organic tissue during the freezing process.

For now, the application is for better transport and storage of blood or temperature-sensitive medicines. But if AI helps them find a really good cryoprotectant, cryogenic preservation of humans could go from a moneymaking racket to a plausible option.

AI is contributing to the medical field in other ways that might make you a little nervous. New research shows that a surprising number of doctors are turning to ChatGPT for help diagnosing patients. Is that a good thing?

If you’re excited about what’s happening in medicine and are considering a career as a doctor, this professor says you may want to rethink that.

This is the final warning for those considering careers as physicians: AI is becoming so advanced that the demand for human doctors will significantly decrease, especially in roles involving standard diagnostics and routine treatments, which will be increasingly replaced by AI.… pic.twitter.com/VJqE6rvkG0

— Derya Unutmaz, MD (@DeryaTR_) September 13, 2024

In other news…

Here are some other clickworthy AI stories we enjoyed this week:

- Google’s NotebookLM turns your written content into a podcast. This is crazy good.

- When Japan switches on the world’s first zetta-class supercomputer in 2030, it will be 1,000 times faster than the world’s current fastest supercomputer.

- SambaNova challenges OpenAI’s o1 model with an open-source Llama 3.1-powered demo.

- More than 200 tech industry players sign an open letter asking Gavin Newsom to veto the SB 1047 AI safety bill.

- Gavin Newsom signed two bills into law to protect living and deceased performers from AI cloning.

- Sam Altman departs OpenAI’s safety committee to make it more “independent”.

- OpenAI says the signs of life shown by ChatGPT in initiating conversations are just a glitch.

- RunwayML launches its Gen-3 Alpha Video to Video feature for paid users of its app.

Gen-3 Alpha Video to Video is now available on web for all paid plans. Video to Video represents a new control mechanism for precise movement, expressiveness and intent within generations. To use Video to Video, simply upload your input video, prompt in any aesthetic direction… pic.twitter.com/ZjRwVPyqem

— Runway (@runwayml) September 13, 2024

And that’s a wrap.

It’s not surprising that AI models like o1 present more risk as they get smarter, but the sneakiness during testing was weird. Do you think OpenAI will stick to its self-imposed safety level restrictions?

The Humanity’s Last Exam project was an eye-opener. Humans are struggling to find questions tough enough to stump AI. What happens after that?

If you believe in conspiracy theories, do you think an AI chatbot could change your mind? Amazon Echo is always listening, the government uses big tech to spy on us, and Mark Zuckerberg is a robot. Prove me wrong.

Let us know what you think, follow us on X, and send us links to cool AI stuff we may have missed.

The post DAI#57 – Tricky AI, exam challenge, and conspiracy cures appeared first on DailyAI.