Why does AI hallucinate?

On April 2, the World Health Organization launched a chatbot named SARAH to raise awareness of health topics such as eating well and quitting smoking.

But like any other chatbot, SARAH started giving incorrect answers. That led to plenty of online trolling and, finally, the usual disclaimer: the chatbot’s answers might not be accurate. This tendency to make things up, known as hallucination, is one of the biggest obstacles chatbots face. Why does this happen? And why can’t we fix it?

Let’s explore why large language models hallucinate by looking at how they work. First, making stuff up is exactly what LLMs are designed to do. The chatbot draws responses from the large language model without looking up information in a database or using a search engine.

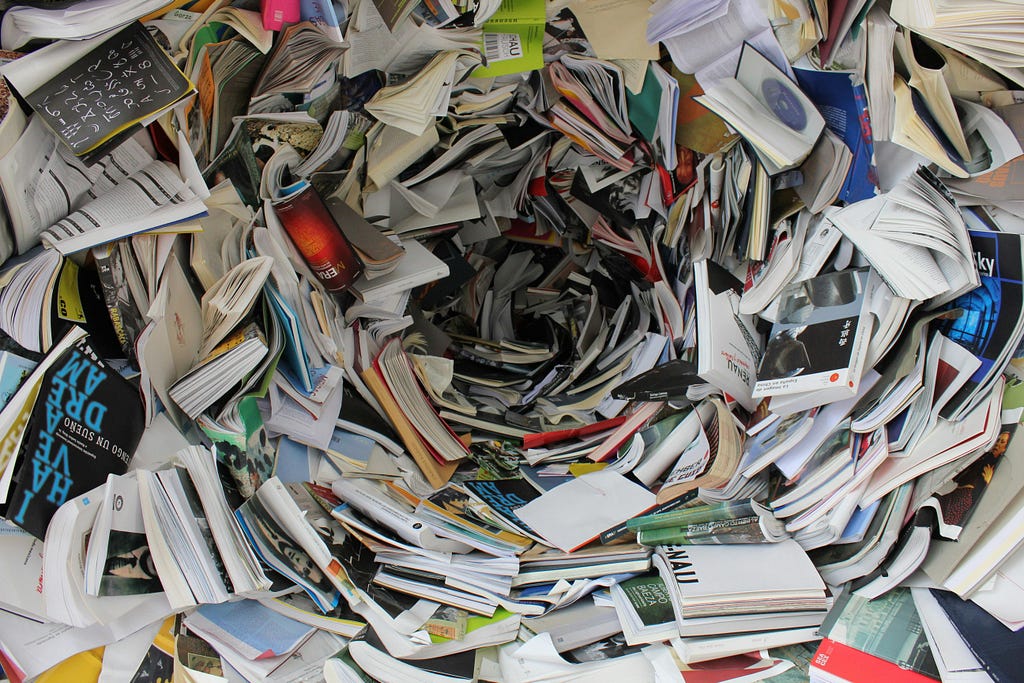

A large language model contains billions and billions of numbers. It uses these numbers to calculate its responses from scratch, producing new sequences of words on the fly. A large language model is more like a giant vector of numbers than an encyclopedia: it computes its answers rather than looking them up.

Large language models generate text by predicting the next word in a sequence. The new, longer sequence is then fed back into the model, which predicts the word after that. The cycle repeats, and it can generate almost any kind of text. LLMs just love dreaming.
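The predict-and-feed-back loop can be sketched with a toy stand-in for a real model. This is only an illustration: the tiny corpus, the word-pair counting, and the names `train_bigram` and `generate` are assumptions made for this example, and a real LLM uses a neural network over far more context than the previous word.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count which word follows which in a tiny training text."""
    words = corpus.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_words=5):
    """Predict the next word, append it, and feed the longer sequence back in."""
    sequence = prompt.split()
    for _ in range(max_new_words):
        followers = counts.get(sequence[-1])
        if not followers:  # no known continuation, so stop early
            break
        next_word = followers.most_common(1)[0][0]  # greedy: take the likeliest word
        sequence.append(next_word)
    return " ".join(sequence)

# Hypothetical training text, invented for this sketch.
corpus = "the cat sat on the mat and the cat sat on the rug"
model = train_bigram(corpus)
print(generate(model, "the", max_new_words=4))  # the cat sat on the
```

Nothing here is looked up anywhere. The output is simply the most familiar continuation of the pattern, whether or not it is true.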

The model captures the statistical likelihood of a word following certain other words. These likelihoods are set during training, when the values in the model are adjusted over and over until its predictions match the linguistic patterns of the training data. Once trained, the model calculates a score for each word in its vocabulary that reflects how likely that word is to come next.
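To make the scoring step concrete, here is a minimal sketch of how raw scores are commonly turned into probabilities with a softmax. The three-word vocabulary and the score values are invented for illustration. A real model scores tens of thousands of tokens.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - highest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical scores for the word after "The capital of France is".
scores = {"Paris": 4.0, "Lyon": 2.0, "pizza": 0.5}
probs = softmax(scores)

for word, p in probs.items():
    print(f"{word}: {p:.3f}")
```

Even the least plausible word ends up with a probability above zero, which is why an unlikely continuation can still be produced.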

So basically, all these hyped-up large language models do is hallucinate. We only notice when the output is wrong. And the problem is that the errors are often hard to spot, because these models are so good at producing fluent, plausible text. That makes trusting them hard.

Can we control what these large language models generate? These models are too complicated to be tinkered with by hand, but some believe that training them on even more data will reduce the error rate.

You can also improve accuracy by asking the model to work through its response step by step. This method, known as chain-of-thought prompting, can make the outputs more reliable and keep the model from drifting off course.
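As a rough illustration, the difference between a direct prompt and a chain-of-thought prompt can be as small as one added instruction. The helper name `build_prompts` and the sample question are hypothetical, and the sketch only builds the prompt text; it does not call any chatbot API.

```python
def build_prompts(question):
    """Return a direct prompt and a step-by-step variant of the same question."""
    direct = f"Q: {question}\nA:"
    # The added phrase nudges the model to write out its reasoning first.
    step_by_step = f"Q: {question}\nA: Let's think step by step."
    return direct, step_by_step

direct, step_by_step = build_prompts(
    "A pack has 12 pencils. How many pencils are in 3 packs?"
)
print(direct)
print()
print(step_by_step)
```

The second prompt asks the model to spell out intermediate steps before giving its answer, which is the idea behind chain-of-thought prompting.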

But this does not guarantee 100 percent accuracy. As long as the models are probabilistic, there is a chance that they will produce the wrong output. It is like rolling a die: even if you weight it to favor one result, there is still a small chance it will land on something else.
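The loaded-die analogy can be simulated in a few lines. The 95 percent weight, the number of rolls, and the function name `roll_loaded_die` are arbitrary assumptions chosen for this sketch.

```python
import random

def roll_loaded_die(rng, n_rolls, favored=6, favored_weight=0.95):
    """Roll a die that lands on `favored` most of the time, but not always."""
    faces = [1, 2, 3, 4, 5, 6]
    other_weight = (1 - favored_weight) / 5  # split the remainder across other faces
    weights = [favored_weight if f == favored else other_weight for f in faces]
    return rng.choices(faces, weights=weights, k=n_rolls)

rng = random.Random(0)  # fixed seed so the run is repeatable
rolls = roll_loaded_die(rng, 10_000)
sixes = rolls.count(6)
print(f"sixes: {sixes}, something else: {len(rolls) - sixes}")
```

However heavily the die is weighted, a long enough run still turns up other faces. The same holds for a model that samples its next word from a probability distribution.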

People also tend to believe these models and let their guard down, so the errors go unnoticed. Perhaps the best fix for hallucinations is to manage our expectations of these chatbots and cross-verify the facts.

Why does AI hallucinate? was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.