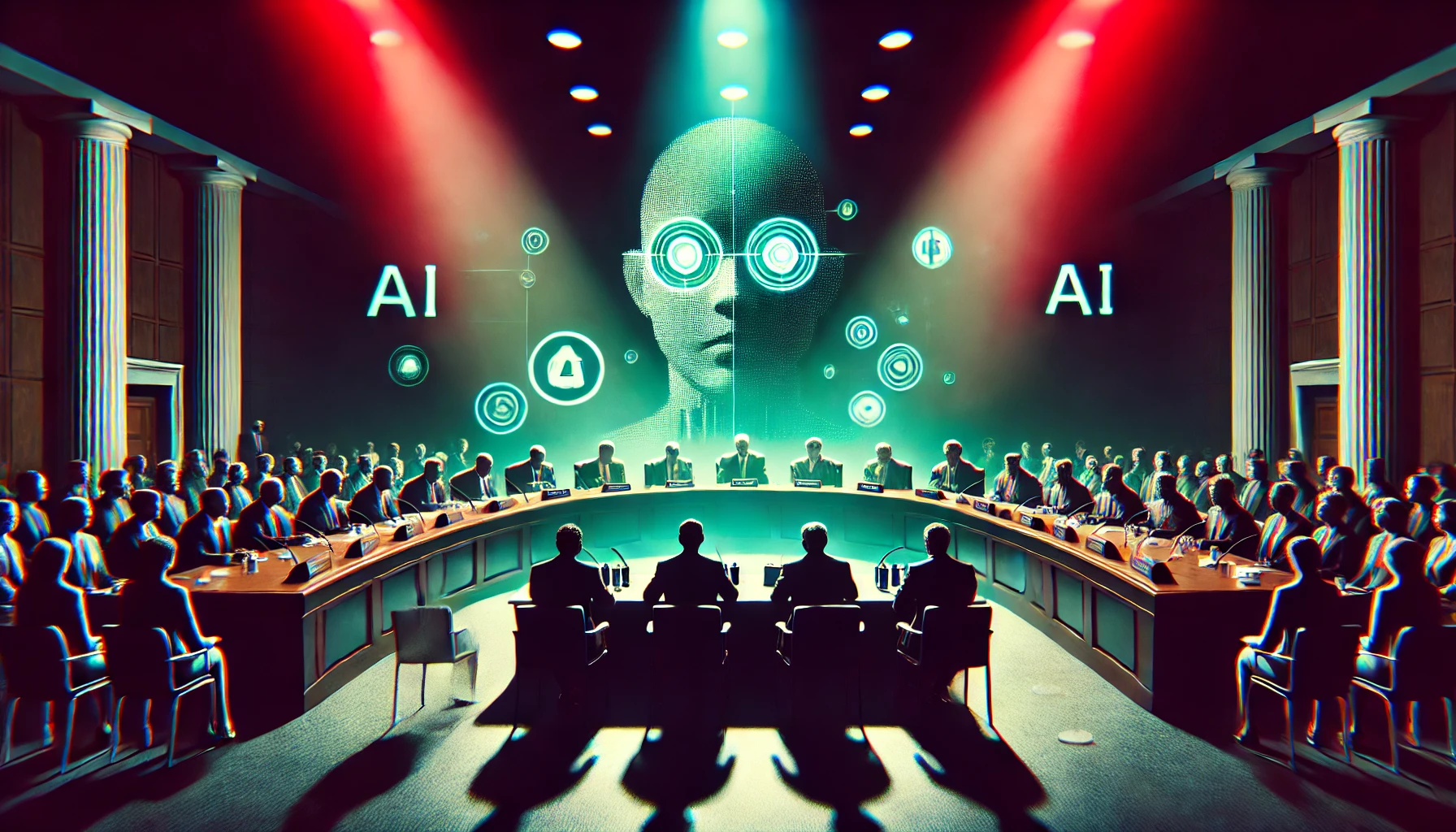

Senate probes OpenAI’s safety and governance after whistleblower claims

OpenAI has found itself at the center of a Senate inquiry following allegations of rushed safety testing.

Five senators, led by Brian Schatz (D-Hawaii), demanded that the company provide detailed information about its safety practices and employee agreements.

The inquiry comes in the wake of a Washington Post report suggesting that OpenAI may have compromised on safety protocols in its rush to release GPT-4 Omni, its latest AI model.

Meanwhile, whistleblowers, including high-profile researchers from OpenAI’s dismantled “superalignment team,” have raised concerns about restrictive employee non-disclosure agreements (NDAs).

Senators’ letter to OpenAI

In a strongly worded letter to OpenAI CEO Sam Altman, five senators demanded detailed information about the company’s safety practices and treatment of employees.

The letter raises concerns about OpenAI’s commitment to responsible AI development and its internal policies.

“Given OpenAI’s position as a leading AI company, it is important that the public can trust in the safety and security of its systems,” the senators write.

They go on to question the “integrity of the company’s governance structure and safety testing, its employment practices, its fidelity to its public promises and mission, and its cybersecurity policies.”

The senators, led by Brian Schatz (D-Hawaii), have set an August 13 deadline for OpenAI to respond to a series of pointed questions. These include whether the company will honor its commitment to dedicate 20% of computing resources to AI safety research and whether it will allow independent experts to test its systems before release.

On the topic of restrictive employee agreements, the letter asks OpenAI to confirm it “will not enforce permanent non-disparagement agreements for current and former employees” and to commit to “removing any other provisions from employment agreements that could be used to penalize employees who publicly raise concerns about company practices.”

OpenAI later took to X to reassure the public about its commitment to safety.

“Making sure AI can benefit everyone starts with building AI that is helpful and safe. We want to share some updates on how we’re prioritizing safety in our work,” the company stated in a recent post.

OpenAI emphasized its Preparedness Framework, designed to evaluate and protect against risks posed by increasingly powerful AI models.

“We won’t release a new model if it crosses a ‘medium’ risk threshold until we’re confident we can do so safely,” the company assured.

“We are developing levels to help us and stakeholders categorize and track AI progress. This is a work in progress and we’ll share more soon,” OpenAI (@OpenAI) posted on X on July 23, 2024.

Addressing the allegations of restrictive employee agreements, OpenAI stated, “Our whistleblower policy protects employees’ rights to make protected disclosures. We also believe rigorous debate about this technology is important and have made changes to our departure process to remove non-disparagement terms.”

The company also mentioned recent steps to bolster its safety measures.

In May, OpenAI’s Board of Directors launched a new Safety and Security Committee, which includes retired US Army General Paul Nakasone, a leading cybersecurity expert.

OpenAI maintains its stance on AI’s benefits. “We believe that frontier AI models can greatly benefit society,” the company stated while acknowledging the need for continued vigilance and safety measures.

Despite some progress, the chances of passing comprehensive AI legislation this year are low as attention shifts towards the 2024 election.

In the absence of new laws from Congress, the White House has largely relied on voluntary commitments from AI companies to ensure they create safe and trustworthy AI systems.

The post Senate probes OpenAI’s safety and governance after whistleblower claims appeared first on DailyAI.