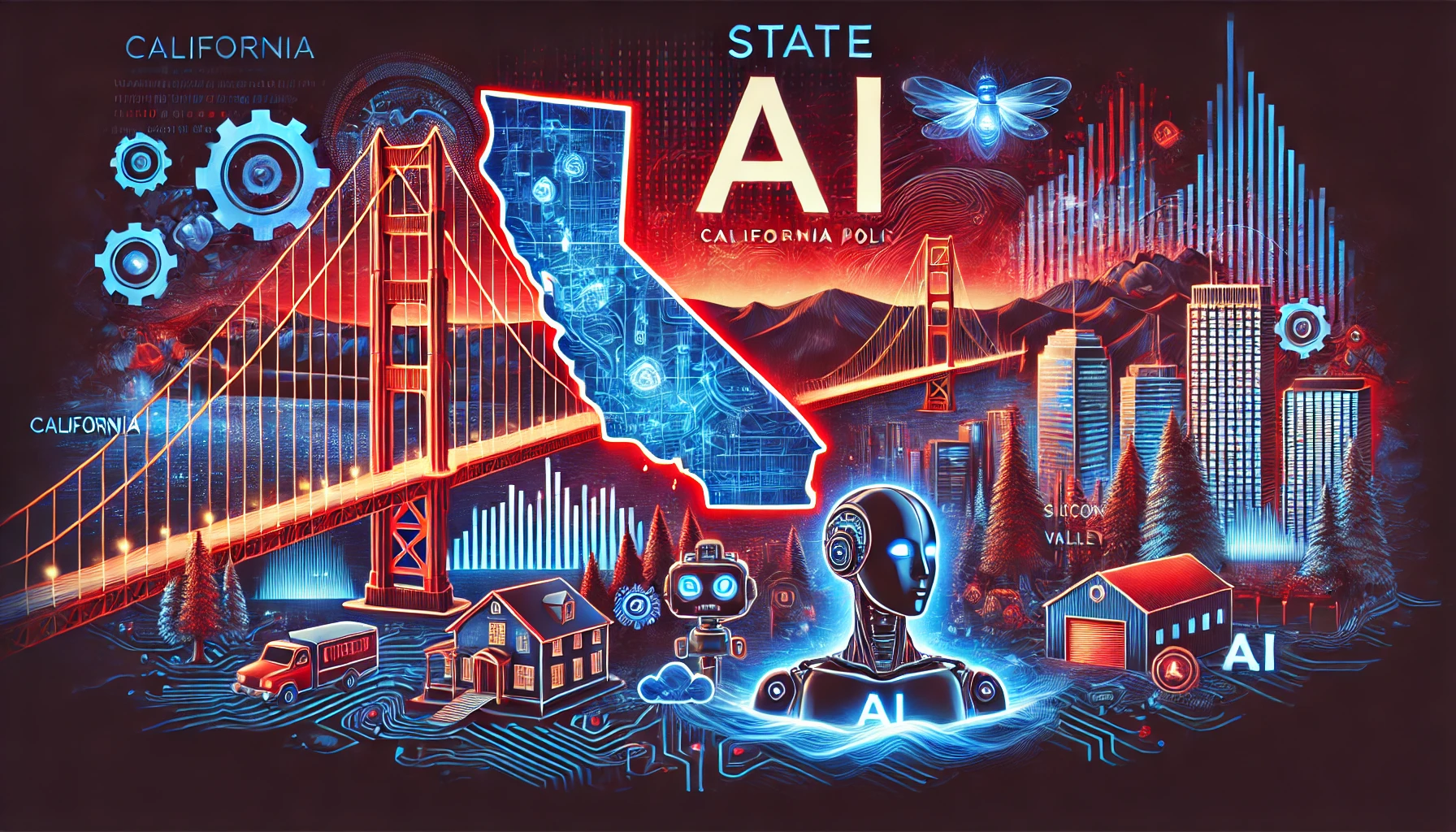

Proposed California bills could be disastrous for AI development

California is considering several bills to regulate AI, with SB 1047 and AB 3211 set to have a major impact on AI developers if they become law next month.

Arguments over AI risks and how to mitigate them continue. In the absence of US federal AI legislation, California has drafted laws that could set a precedent for other states.

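Each bill has already passed one chamber of the California legislature: SB 1047 cleared the Senate and AB 3211 cleared the Assembly. Both could become law next month if they pass the other chamber and are signed by the governor.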

Supporters of the bills say they provide long-overdue protections against the risks of unsafe AI models and AI-generated synthetic content. Critics say the bills' requirements are unworkable and will kill AI development in the state.

Here’s a quick look at the two bills and why they may have Californian AI developers considering incorporating in other states.

SB 1047

SB 1047, the “Safe and Secure Innovation for Frontier Artificial Intelligence Models Act,” will apply to developers of models that cost $100 million or more to train.

These developers will be required to implement additional safety checks and will be liable if their models are used to cause “critical harm”.

The bill defines “critical harm” as the “creation or use of a chemical, biological, radiological, or nuclear weapon in a manner that results in mass casualties…or at least five hundred million dollars ($500,000,000) of damage resulting from cyberattacks on critical infrastructure.”

This might sound like a sensible way to make sure creators of advanced AI models take sufficient care, but is it practical?

A car manufacturer should be liable if its vehicle is unsafe, but should it be liable if a person intentionally drives that vehicle into someone?

Meta’s chief AI scientist, Yann LeCun, doesn’t think so. LeCun has criticized the bill, saying, “Regulators should regulate applications, not technology…Making technology developers liable for bad uses of products built from their technology will simply stop technology development.”

The bill will also make model creators liable if someone builds a derivative of their model and uses it to cause harm. That requirement would be the death of open-weight models, which, ironically, the US Department of Commerce has endorsed.

SB 1047 passed the California Senate in May with a 32-1 vote and could become law if it passes the Assembly in August and is signed by the governor.

AB 3211

The California Provenance, Authenticity, and Watermarking Standards Act (AB 3211) aims to ensure transparency and accountability in the creation and distribution of synthetic content.

If something was generated by AI, the bill says it must be labeled or carry a digital watermark that makes this clear.

The watermark needs to be “[m]aximally indelible…designed to be as difficult to remove as possible.” Much of the industry has adopted the C2PA provenance standard, but C2PA largely relies on metadata, which is trivial to strip from content, so it is unclear whether it would meet that bar.

The bill requires every conversational AI system to “obtain a user’s affirmative consent before beginning the conversation.”

So every time you used ChatGPT or Siri, it would have to open the conversation with something like ‘I am an AI. Do you understand and consent to this?’ Every time.

Another requirement is that creators of generative AI models must keep a register of any content their models produce that could be mistaken for human-generated content.

This would likely be impossible for creators of open models. Once someone downloads and runs the model locally, how would you monitor what they generate?

The bill applies to every model distributed in California, regardless of its size or who made it. If it passes the Senate next month, AI models that don’t comply will not be allowed to be distributed or even hosted in California. Sorry about that, Hugging Face and GitHub.

If you find yourself on the wrong side of the law, the bill allows for fines of “$1 million or 5% of violator’s global annual revenue, whichever is greater.” For the likes of Meta or Google, that could amount to billions of dollars.

AI regulation is starting to shift Silicon Valley’s political support toward Trump allies’ “Make America First in AI” approach. If SB 1047 and AB 3211 are signed into law next month, they could trigger an exodus of AI developers to less regulated states.

The post Proposed California bills could be disastrous for AI development appeared first on DailyAI.