OpenAI debuts the “o1” series, pushing the boundaries of AI reasoning


OpenAI has released new advanced reasoning models dubbed the “o1” series.

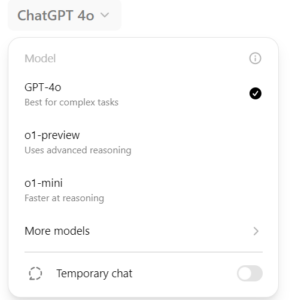

o1 currently comes in two versions – o1-preview and o1-mini – and is designed to perform complex reasoning tasks, marking what OpenAI describes as “a new paradigm” in AI development.

“This is what we consider the new paradigm in these models,” explained Mira Murati, OpenAI’s Chief Technology Officer, in a statement to Wired. “It is much better at tackling very complex reasoning tasks.”

Unlike previous iterations that excelled primarily by scale, e.g., by throwing compute at a problem, o1 aims to replicate the human-like thought process of “reasoning through” problems.

Rather than generating a single answer, the model works step by step, considering multiple approaches and revising itself as necessary, an approach known as “chain of thought” reasoning.

This allows it to solve complex problems in math, coding, and other fields with a level of precision that existing models, including GPT-4o, struggle to achieve.

We’re releasing a preview of OpenAI o1—a new series of AI models designed to spend more time thinking before they respond.

These models can reason through complex tasks and solve harder problems than previous models in science, coding, and math. https://t.co/peKzzKX1bu

— OpenAI (@OpenAI) September 12, 2024

Mark Chen, OpenAI’s Vice President of Research, elaborated on how o1 distinguishes itself by enhancing the learning process. “The model sharpens its thinking and fine-tunes the strategies that it uses to get to the answer,” said Chen.

He demonstrated the model with several mathematical puzzles and advanced chemistry questions that previously stumped GPT-4o.

One puzzle that baffled earlier models asked: “A princess is as old as the prince will be when the princess is twice as old as the prince was when the princess’s age was half the sum of their present age. What is the age of the prince and princess?”

The o1 model determined the correct answer: the prince is 30, and the princess is 40.
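The answer is easy to check by hand or in code. The sketch below is only an illustration of the riddle's logic, not anything OpenAI published: the function name `satisfies_puzzle` and the 1 to 100 search range are assumptions made for this example. It walks through the riddle's conditions in order and searches for whole-number ages that satisfy them. Worth noting: the riddle as quoted only pins down the ratio of the ages (princess to prince is 4 to 3), so 40 and 30 is one valid pair among several.

```python
from fractions import Fraction


def satisfies_puzzle(princess: int, prince: int) -> bool:
    """Check the riddle's chain of conditions for one pair of present ages."""
    # Years ago when the princess's age was half the sum of their present ages.
    years_ago = princess - Fraction(princess + prince, 2)
    # The prince's age at that past moment.
    prince_then = prince - years_ago
    # Years from now until the princess is twice that age.
    years_ahead = 2 * prince_then - princess
    # "was" and "will be" require a past and a future moment respectively.
    if years_ago <= 0 or years_ahead <= 0:
        return False
    # The princess's present age must equal the prince's age at that future moment.
    return princess == prince + years_ahead


# Hypothetical search range, chosen only for illustration.
solutions = [
    (princess, prince)
    for princess in range(1, 101)
    for prince in range(1, 101)
    if satisfies_puzzle(princess, prince)
]

print((40, 30) in solutions)
print(all(3 * princess == 4 * prince for princess, prince in solutions))
```

Both checks print `True`: the pair reported for o1 passes, and every pair found in the range has the same 4:3 ratio.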

How to access o1

ChatGPT Plus users can already access o1 from inside ChatGPT.

That’s a surprise, as GPT-4o’s voice feature is still rolling out months after its demos. I don’t think many expected o1 to land so suddenly.

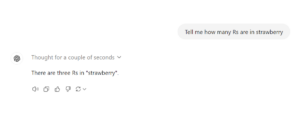

o1 seems related to OpenAI’s codenamed “Strawberry” project. Now here’s a funny thing: most AI models don’t know how many Rs are in “strawberry.” It trips their reasoning skills.

I tested this in o1. Lo and behold, it got it right. One test proves little, but o1’s approach to reasoning does seem to help with such questions.
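For reference, the count itself is trivial for ordinary code, which is what makes the question such a popular test of language models. A minimal check (the variable names are just for this example):

```python
word = "strawberry"
# Count occurrences of the letter "r", ignoring case.
r_count = word.lower().count("r")
print(r_count)  # 3
```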

Sam Altman’s recent spate of strawberry-related social media talk might be linked to this famous strawberry-flavoured AI problem and o1’s codename “Project Strawberry.” If not, it’s a weird coincidence.

A step change in problem-solving

The o1 model’s ability to “reason” its way through problems represents progress in AI, and it could prove groundbreaking if its performance holds up ‘in the wild.’

The new models have already shown strong performance in tests like the American Invitational Mathematics Examination (AIME).

According to OpenAI, the new model solved 83% of the problems presented in the AIME, compared to just 12% by GPT-4o.

While o1’s strengths are evident, it does come with trade-offs.

The model takes longer to generate answers because it reasons through a problem before responding. We don’t yet know how pronounced the delay will be or how much it will affect usability.

o1’s strange origins

o1 comes off the back of talk surrounding an OpenAI project codenamed “Strawberry,” which emerged in late 2023.

It was rumoured to be an AI model capable of autonomous web exploration, designed to conduct “deep research” rather than simple information retrieval.

Talk surrounding Strawberry swelled recently when The Information reported details of OpenAI’s internal projects. Namely, OpenAI is allegedly developing two forms of Strawberry.

- One is a smaller, simplified version intended for integration into ChatGPT. It aims to enhance reasoning capabilities in scenarios where users require more thoughtful, detailed answers rather than quick responses. This sounds like o1.

- Another is a larger, more powerful version that is used to generate high-quality “synthetic” training data for OpenAI’s next flagship language model, codenamed “Orion.” This may or may not be linked to o1.

OpenAI has provided no direct clarification on what Strawberry truly is.

A complement, not a replacement

Murati emphasized that o1 is not designed to replace GPT-4o but to complement it.

“There are two paradigms,” she said. “The scaling paradigm and this new paradigm. We expect that we will bring them together.”

While OpenAI continues to develop GPT-5, which will likely be even larger and more powerful than GPT-4o, future models could incorporate the reasoning functions of o1.

This fusion could address the persistent limitations of large language models (LLMs), such as their struggle with seemingly simple problems that require logical deduction.

Anthropic and Google are allegedly racing to integrate similar features into their models. Google’s AlphaProof project, for instance, also combines language models with reinforcement learning to tackle difficult math problems.

However, Chen believes that OpenAI has the edge. “I do think we have made some breakthroughs there,” he said. “I think it is part of our edge. It’s actually fairly good at reasoning across all domains.”

Yoshua Bengio, a leading AI researcher and recipient of the prestigious Turing Award, lauded the progress but urged caution.

“If AI systems were to demonstrate genuine reasoning, it would enable consistency of facts, arguments, and conclusions made by the AI,” he told the FT.

Safety and ethical considerations

As part of its commitment to responsible AI, OpenAI has bolstered the safety features of the o1 series, including “on-by-default” content safety tools.

These tools help prevent the model from producing harmful or unsafe outputs.

“We’re pleased to announce that Prompt Shields and Protected Materials for Text are now generally available in Azure OpenAI Service,” Microsoft stated in a blog post.

The o1 series is available for early access in Microsoft’s Azure AI Studio and GitHub Models, with a broader release planned soon.

OpenAI hopes that o1 will enable developers and enterprises to innovate more cost-effectively, aligning with its broader mission of making AI more accessible to corporate users.

“We believe that it’ll allow us to ship intelligence cheaper,” concluded Chen. “And I think that really is the core mission of our company.”

All in all, an exciting release. It will be very interesting to see what questions, problems, and tasks o1 thrives on.

The post OpenAI debuts the “o1” series, pushing the boundaries of AI reasoning appeared first on DailyAI.
