LLMs are really bad at solving simple river crossing puzzles

Large language models like GPT-4o can perform incredibly complex tasks, but even the top models struggle with some basic reasoning challenges that children can solve. In an interview with CBS, the ‘godfather of AI’, Geoffrey Hinton, said that AI systems might be more intelligent than we know and there’s a chance the machines could take over. When asked about the level of current AI technology Hinton said, “I think we’re moving into a period when for the first time ever we may have things more intelligent than us.” Meta’s chief AI scientist, Yann LeCun, will have us believe that we’re The post LLMs are really bad at solving simple river crossing puzzles appeared first on DailyAI.

Large language models like GPT-4o can perform incredibly complex tasks, but even the top models struggle with some basic reasoning challenges that children can solve.

In an interview with CBS, the ‘godfather of AI’, Geoffrey Hinton, said that AI systems might be more intelligent than we know and there’s a chance the machines could take over.

When asked about the level of current AI technology Hinton said, “I think we’re moving into a period when for the first time ever we may have things more intelligent than us.”

Meta’s chief AI scientist, Yann LeCun, will have us believe that we’re a long way off from seeing AI achieve even “dog-level” intelligence.

So which is it?

This week, users on X posted examples of the incredible coding ability Anthropic’s new Claude model exhibits. Others ran experiments to highlight how AI models still struggle with very basic reasoning.

River crossing puzzle

The classic river crossing puzzle has multiple variations but Wikipedia’s version sums it up like this:

A farmer with a wolf, a goat, and a cabbage must cross a river by boat. The boat can carry only the farmer and a single item. If left unattended together, the wolf would eat the goat, or the goat would eat the cabbage. How can they cross the river without anything being eaten?

Finding the solution requires some basic planning and reasoning on different scenarios but it’s not a particularly difficult problem to solve. If you’re human.

Can GPT-4o solve it? If you copy and paste the puzzle into ChatGPT it gives you the right answer, but that Wikipedia page was almost certainly in its training data.

What if we made the puzzle a lot simpler and changed it slightly so the LLM couldn’t rely on its training data?

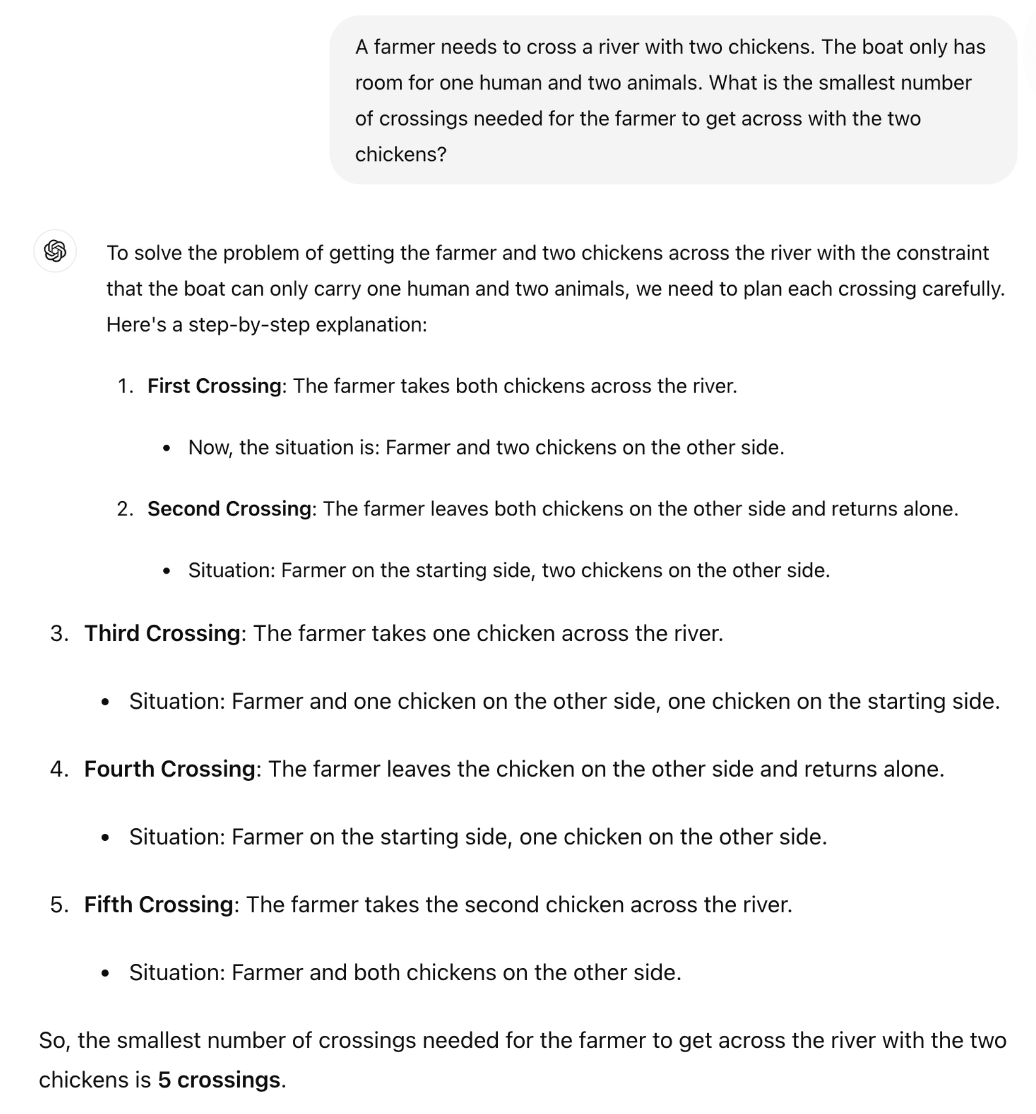

British Mathematics Professor Sir William Timothy Gowers showed how the inability of LLMs to apply logic is easily exposed.

The correct answer to the puzzle is that only one trip is required. But it seems like ChatGPT is trying to remember an answer rather than simply reasoning through the puzzle.

Is Claude Sonnet 3.5 any better?

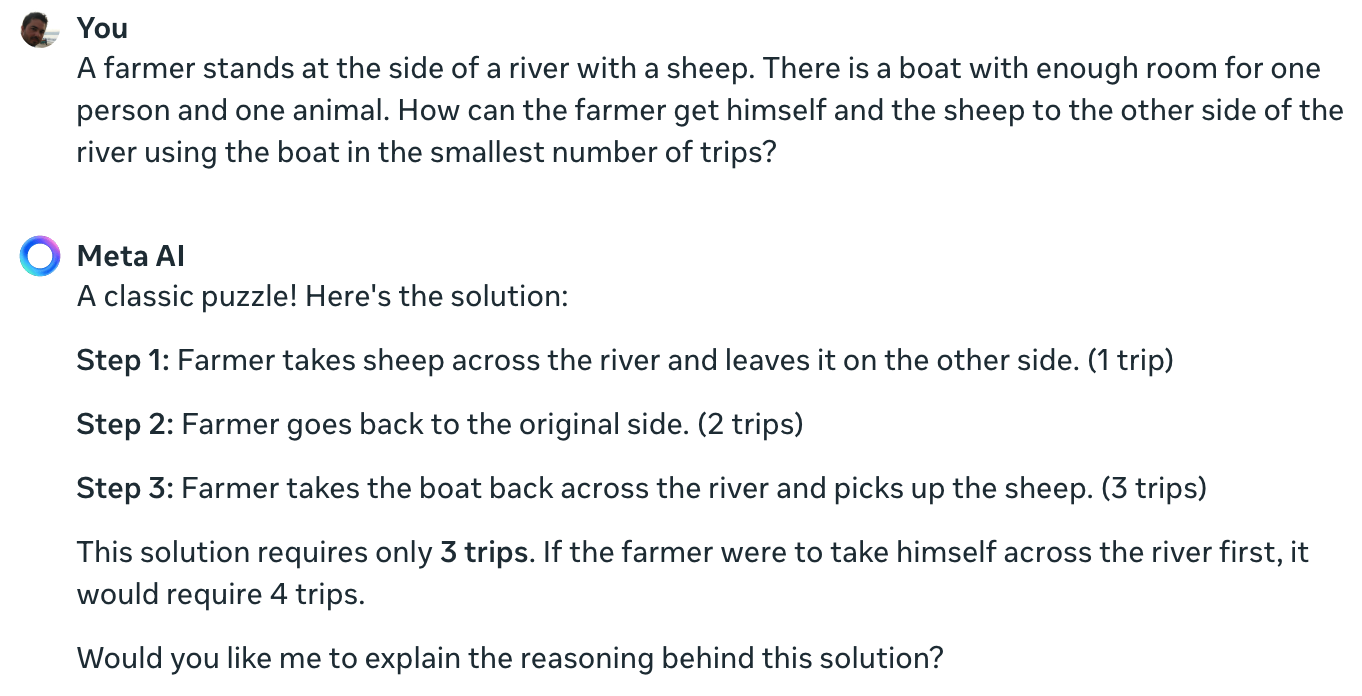

Meta Data Scientist Colin Fraser’s experiment confirms that even the leading AI model currently available can’t solve this simple puzzle.

Claude still can’t solve the impossible one farmer one sheep one boat problem pic.twitter.com/TU13wermLZ

— Colin Fraser (@colin_fraser) June 20, 2024

It may have been a little disingenuous for a data scientist from Meta not to show his results using Llama 3.

I asked Meta AI the same question and it also gets it humorously wrong.

Yann LeCun explained the reason behind these results saying, “The issue is that LLMs have no common sense, no understanding of the world, and no ability to plan (and reason).”

Is that true, or is something else at play?

What these interactions might reveal is not a lack of reasoning ability, but rather how much the output of an LLM is influenced by its training data. Meta AI’s response calling this a “classic puzzle” hints that this might be what’s happening.

The river crossing puzzle variations often reference the amount of “trips” required. When you pose the puzzle without using that word, the LLM solves it.

Indeed. When there’s no prompt for “trips”, which brings memories of the previous solutions of so many similar problems, but the prompt “fastest way possible” along with COT, it answers correctly pic.twitter.com/E27vBv2y2R

— AnKo (@anko_979) June 21, 2024

These experiments were interesting, but they don’t definitively answer the argument over whether AI models are truly intelligent or simply next-token predictive machines.

However, the results do highlight how susceptible LLMs are to training data. When GPT-4o aces the LSAT exams, is it “thinking” to find the answers to the problems or remembering them?

Until the engineers understand what goes on inside the AI black boxes they created, the arguments on X will continue unresolved.

The post LLMs are really bad at solving simple river crossing puzzles appeared first on DailyAI.