GPT-4o Rolls Out: New Features and Voice Mode Preview

GPT-4o has launched with upgraded capabilities in ChatGPT, offering enhanced text and image processing for all users. It's faster, more cost-effective, and promises exciting new voice and video features soon. Experience the future of AI today!

Just released: OpenAI's game-changer, GPT-4o ("o" for "omni").

No text prompts!

GPT-4o can understand and respond to spoken language in real time, making it possible to hold something close to a normal conversation.

It can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.

It matches GPT-4 Turbo performance on English text and code, with significant improvements on text in non-English languages, while also being much faster and 50% cheaper in the API.

Just think how cool it would be to have an actual conversation with AI: asking follow-up questions and getting answers that respond to your tone.

But GPT-4o doesn't stop there: it can also take video input and understand what it sees.

Stuck on a math problem? Just show GPT-4o a picture. Need help explaining to someone what a complex piece of code is doing? Point the camera at it, and GPT-4o will walk you through it.

These groundbreaking vision capabilities, together with a significant speed increase and support for 50 languages, truly open doors for education, communication, and content creation.

Keep in mind that GPT-4o's vision capabilities are still a work in progress. It can often analyze an image and understand its content, but its accuracy is not perfect in every situation.

Nevertheless, the potential for future improvements is really exciting.

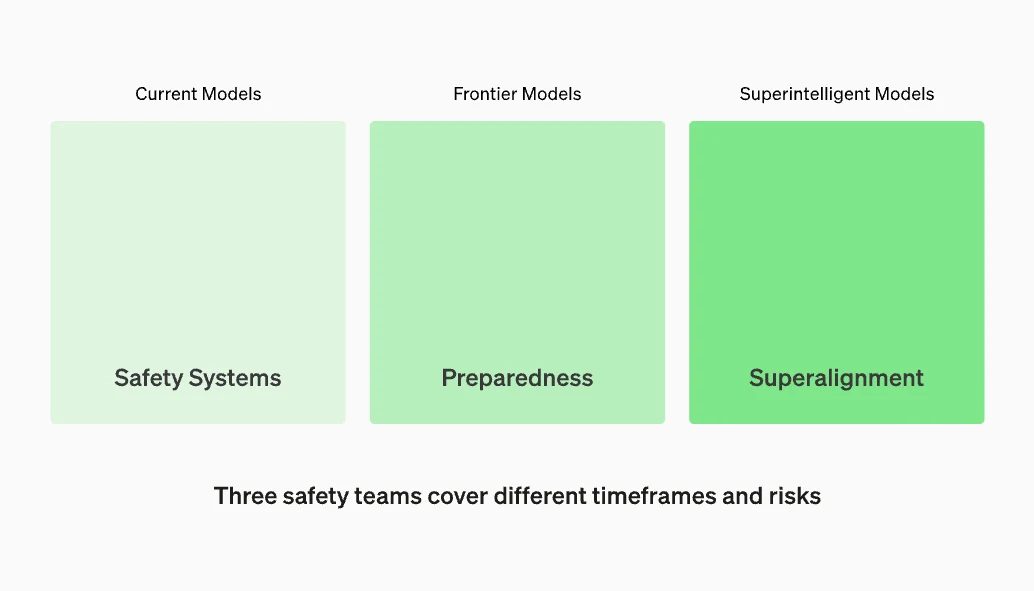

GPT-4o Safety and Limitations

GPT-4o has safety built in across modalities, through techniques such as filtering its training data and refining the model's behavior after training.

OpenAI evaluated GPT-4o according to its Preparedness Framework and in line with its voluntary commitments.

In evaluations of cybersecurity, CBRN (chemical, biological, radiological, and nuclear) risks, persuasion, and model autonomy, GPT-4o does not score above medium risk in any category. The assessment combined automated and human evaluations throughout training, tested both the pre- and post-safety-mitigation versions of the model, and used custom prompts to probe its capabilities.

GPT-4o has also undergone extensive external red teaming with more than 70 experts in fields such as social psychology, bias and fairness, and misinformation. Their findings were used to iteratively improve its safety interventions, and new risks will be addressed as they are identified.

GPT-4o pushes deep learning further in the direction of practical usability. Its capabilities are being rolled out iteratively, with red teams given extended access ahead of the wider release.

Free AI for Everybody: A Look Into the Future

GPT-4o is now live for free users, and Plus users get message limits up to five times higher.

In the coming weeks, a new version of Voice Mode powered by GPT-4o will roll out in alpha to ChatGPT Plus users.

GPT-4o is also live for developers in the API as a text and vision model. Compared with its predecessor, GPT-4 Turbo, it is up to twice as fast, half the cost, and has rate limits up to five times higher.
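For developers curious what a combined text-and-image request might look like, here is a minimal sketch. It assumes the Chat Completions message format (a `content` list mixing `text` and `image_url` parts) and the model name `gpt-4o`; the helper `build_vision_request` is hypothetical and only builds the request body, so it runs offline without an API key.

```python
import base64


def build_vision_request(question: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build a Chat Completions-style request body asking gpt-4o about one image.

    Hypothetical helper for illustration: it only assembles a dict and sends
    nothing over the network. The image is embedded as a base64 data URL.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    # The text part carries the user's question.
                    {"type": "text", "text": question},
                    # The image part carries the picture, e.g. a photo of a math problem.
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real photo.
    payload = build_vision_request("How do I solve this equation?", b"placeholder image bytes")
    print(payload["model"])
    print(payload["messages"][0]["content"][1]["image_url"]["url"][:40])
```

With the official `openai` Python package installed and an API key configured, a dictionary like this could be passed as `client.chat.completions.create(**payload)` with `client = OpenAI()`. Keeping payload construction separate from the network call makes the request easy to inspect and test.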

In the coming weeks, support for GPT-4o's new audio and video capabilities will launch in the API for a small group of trusted partners.

This could dramatically change how freely people can use AI, how directly they can interact with it, and how much they can accomplish with GPT-4o.

Ready to experience the future of AI in conversations?

Try GPT-4o now and experience the difference in the way you learn, create, and communicate!