A new thesis for the Fermi Paradox: is AI the Great Filter or a cosmic colonizer?

The vastness of the universe has long captivated human imagination, prompting us to wonder: are we alone?

It’s a question that has fascinated humanity for millennia, and today, we have the technology – such as radio telescopes – to push the search for extraterrestrial intelligence (SETI) deeper into space.

We have yet to find anything. While no definitive evidence of extraterrestrial life has been publicly disclosed, the search continues, and the possibility remains open.

That’s despite there being billions of potentially habitable worlds in the Milky Way alone, with some 1,780 confirmed exoplanets (planets beyond our solar system), around 16 of which are located in their star’s habitable zone.

Some, like the ‘super-Earth’ Kepler-452b, are thought to be remarkably similar to our own planet.

You don’t need a perfect environment to support life, either. Extremophile bacteria on Earth are capable of living in some of the harshest conditions found on our planet.

But it’s not just microbes that can thrive in extreme environments. The Pompeii worm, for example, lives in hydrothermal vents on the ocean floor and can withstand temperatures up to 176°F (80°C).

Tardigrades, also known as water bears, can survive in the vacuum of space, endure extreme radiation, and withstand pressures six times greater than those found in the deepest parts of the ocean.

The hardiness of life on Earth, combined with the sheer volume of habitable worlds, leads many scientists to agree that alien existence is statistically as good as certain.

If that’s the case, where is extraterrestrial life lurking? And why won’t it reveal itself?

From the Fermi Paradox to the Great Filter

Those questions popped up in a casual conversation between physicists Enrico Fermi, Edward Teller, Herbert York, and Emil Konopinski in 1950.

Fermi famously asked, “Where is everybody?” or “Where are they?” (the exact wording is not known).

The now widely known Fermi Paradox is formulated as such: given the vast number of stars and potentially habitable planets in our galaxy, why haven’t we detected signs of alien civilizations?

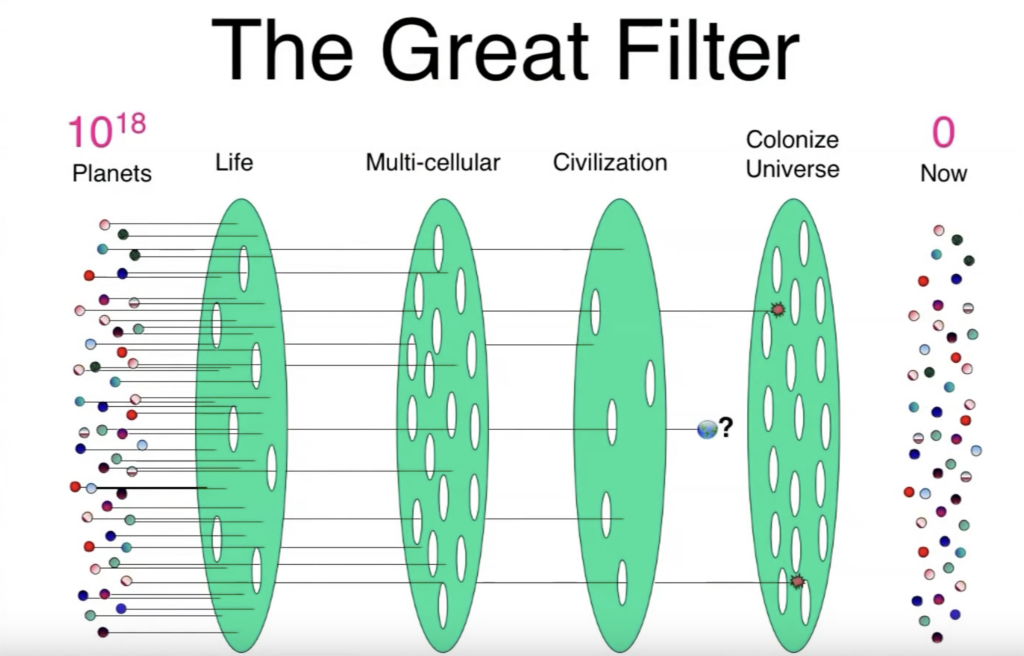

As the Fermi Paradox entered mainstream science, numerous hypotheses attempted to counter, address, rectify, or reinforce it, including the concept of the “Great Filter,” introduced by economist Robin Hanson in 1998.

The Great Filter hypothesis posits that there exists a developmental stage or hurdle that is extremely difficult or nearly impossible for life to surpass.

In other words, civilizations predictably and invariably fail, whether due to resource depletion, natural hazards, interplanetary threats, or other uncontrolled existential risks.

One quirk to the Great Filter is that we don’t know if it’s behind us or in front of us.

If the filter is behind us – for example, if the emergence of life itself is an extremely rare event – it suggests that we’ve overcome the hardest part and might be rare or even alone in the universe.

This scenario, while potentially isolating, is optimistic about our future prospects.

However, if the Great Filter lies ahead of us, it could spell doom for our long-term survival and explain why we don’t see evidence of other civilizations.

The Great Filter is compounded by space’s immense size and the short timelines associated with advanced civilization.

If, for argument’s sake, humanity were to destroy itself in the next 100 years, the technological age would have barely lasted 500 years end-to-end.

That’s an exceptionally small window for us to detect aliens or for aliens to detect us before the Great Filter takes hold.
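To put a rough number on how small that window is, the sketch below estimates the chance that two civilizations' technological ages overlap at all. It is illustrative only: the 10-billion-year span is an assumed round figure, start times are assumed to be uniformly random, signal travel time is ignored, and the function name `overlap_probability` is hypothetical.

```python
def overlap_probability(window_years: float, span_years: float) -> float:
    """Chance that two randomly timed windows of equal length overlap.

    Assumes each start time is uniform over the span and ignores
    signal travel time; both are simplifications for illustration.
    """
    if not 0 < window_years < span_years:
        raise ValueError("window must be positive and shorter than the span")
    latest_start = span_years - window_years
    # Two windows overlap when their start times differ by less than one window.
    ratio = min(window_years / latest_start, 1.0)
    return 1.0 - (1.0 - ratio) ** 2


if __name__ == "__main__":
    window = 500   # length of the technological age, from the text
    span = 10e9    # hypothetical: about 10 billion years of galactic history
    print(f"Overlap probability: {overlap_probability(window, span):.1e}")
```

Under these assumptions the result is about one in ten million for any given pair of civilizations, which is one way to see why even a populated galaxy could appear silent.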

AI brings new puzzles to the Fermi Paradox

The silence of the cosmos has spawned numerous hypotheses, but recent developments in AI have added intriguing new dimensions to this age-old puzzle.

AI raises the possibility of non-biological life that could persist almost indefinitely, in both physical and digital forms.

It could outlast all biological civilizations, triggering or accelerating their demise, thus instigating the “Great Filter” that prevents interstellar and intergalactic colonization.

This hypothesis, recently proposed in an essay by the astronomer Michael A. Garrett, suggests that developing artificial superintelligence (ASI), the stage beyond artificial general intelligence (AGI), could be a critical juncture for civilizations.

ASI development might explain the Fermi Paradox by acting as the Great Filter civilizations can’t seem to pass through.

As Garrett explains:

“The development of artificial intelligence (AI) on Earth is likely to have profound consequences for the future of humanity. In the context of the Fermi Paradox, it suggests a new solution in which the emergence of AI inevitably leads to the extinction of biological intelligence and its replacement by silicon-based life forms.”

Garrett’s hypothesis is rooted in the idea that as civilizations advance, they invariably develop AI. It could either supplant its biological creators or merge with them in ways that fundamentally alter the civilization’s nature and goals.

To mitigate the risk of AI destroying the planet in some way, Garrett calls for regulation, aligning with influential figures in the field who also warn of AI’s existential risks, like Yoshua Bengio, Max Tegmark, and Geoffrey Hinton, as well as those outside of the AI sector, like the late Stephen Hawking.

However, the magnitude of AI’s risks is hotly debated, with others, like Yann LeCun (one of the so-called ‘AI godfathers’ alongside Bengio and Hinton), arguing that AI risks are vastly overblown.

Nevertheless, it’s a tantalizing hypothesis.

In its thirst for a technological antidote to societal and environmental challenges, humanity slurps from the poison chalice of AI – signaling its downfall like potentially millions of similar civilizations before it.

The AI colonization hypothesis

While the idea of AI as a Great Filter presents a compelling explanation for the Fermi Paradox, it also presents a captivating contradiction.

If AI is advanced enough to replace or fundamentally alter its creators, wouldn’t it also be capable of rapid cosmic expansion and colonization? Why would it stop at destroying us?

Of course, the manner in which AI might lead to our demise is manifold. We might use it to build chemical or biological weapons that unleash a plague upon all of humanity, for example.

But, if potentially millions, even billions, of Earth-like worlds have fallen foul of this Great Filter, at least some ‘versions’ of ASI might push beyond their worlds to continue some form of interstellar conquest – even if just for self-preservation.

To examine this contradiction, we must first consider the potential advantages that an advanced AI would have for cosmic colonization:

- Longevity: Unlike biological entities, AI wouldn’t be constrained by short lifespans. This makes long-term space travel and colonization projects much more feasible. An AI could potentially undertake journeys lasting thousands or even millions of years without concern for generational shifts or the psychological toll of long-term space travel on biological beings.

- Adaptability: AI could potentially adapt to a much wider range of environments than biological life. While we’re limited to a narrow band of temperature, pressure, and chemical conditions, an AI could theoretically function in extreme cold, vacuum, or even the crushing pressures and intense heat of gas giant atmospheres.

- Resource efficiency: AI might require far fewer resources to sustain itself compared to biological life. It wouldn’t need breathable air, potable water, or a steady food supply. This efficiency could make long-distance travel and colonization much more viable.

- Rapid self-improvement: Perhaps most significantly, AI could continuously upgrade and improve itself, potentially at exponential rates. This could lead to technological advancements far beyond what we can currently imagine.

From an AI architecture perspective, advanced forms of reinforcement learning (RL) could see AI develop complex goals and strategies for achieving those goals.

If an AI determines that cosmic colonization is an effective means to maximize its reward function (e.g., spreading its influence or acquiring resources), it may pursue this strategy single-mindedly.

Moreover, as AI systems become more agentic, there is a risk of unintended or misaligned goals emerging. Recent studies from Anthropic and DeepMind have illustrated emergent behavior, such as game-playing AI systems developing complex strategies that were not explicitly programmed.

So, an AI seeking to maximize its power might opt for expansion and resource acquisition, potentially taking control of production facilities, critical infrastructure, etc.

A quick note on that: while it sounds far-fetched, it’s well known that IT networks and the operational technology (OT) that powers critical infrastructure, manufacturing plants, etc., are converging.

Advanced malware, including AI-powered malware, can move laterally from IT networks to OT environments, potentially seizing control of the physical-digital systems we depend on, setting the groundwork for further expansion.

AI’s persistence in cosmic timescales

Given these advantages, AI civilizations could spread rapidly across the galaxy, becoming even more detectable than their biological counterparts.

More to the point, and to the detriment of the idea of AI acting as a Great Filter, these artificial systems could persist across cosmic timescales.

As Astronomer Royal Martin Rees and astrophysicist Mario Livio explained in an article published in Scientific American:

“The history of human technological civilization may measure only in millennia (at most), and it may be only one or two more centuries before humans are overtaken or transcended by inorganic intelligence, which might then persist, continuing to evolve on a faster-than-Darwinian timescale, for billions of years.”

Alien life in AI form has captured researchers’ imaginations for decades.

In the book Life 3.0: Being Human in the Age of Artificial Intelligence, physicist and AI researcher Max Tegmark explores scenarios where an advanced AI could potentially convert much of the observable universe into computronium – matter optimized for computation – in the aftermath of what is often called an “intelligence explosion.”

In 1964, Soviet astronomer Nikolai Kardashev categorized civilizations based on their ability to harness energy:

- Type I civilizations can use all the energy available on their planet

- Type II civilizations can harness the entire energy output of their star

- Type III civilizations can control the energy of their entire galaxy
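One way to make these categories concrete is Carl Sagan's later interpolation of the scale, which is not part of Kardashev's original scheme or of this article. It assigns a continuous rating K = (log10 P − 6) / 10 to a civilization using P watts. The sketch below assumes that formula; the function name `kardashev_rating` is hypothetical, and the figure for humanity's present-day power use is a rough assumption.

```python
import math


def kardashev_rating(power_watts: float) -> float:
    """Continuous Kardashev rating using Sagan's interpolation formula."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return (math.log10(power_watts) - 6.0) / 10.0


if __name__ == "__main__":
    examples = {
        "Type I threshold (1e16 W)": 1e16,
        "Type II threshold (1e26 W)": 1e26,
        "Type III threshold (1e36 W)": 1e36,
        # Assumption: humanity uses very roughly 2e13 W today.
        "Humanity today (assumed 2e13 W)": 2e13,
    }
    for label, watts in examples.items():
        print(f"{label}: K = {kardashev_rating(watts):.2f}")
```

On this interpolation, each full step up the scale means a ten-billion-fold increase in power use, and a civilization drawing about 2 × 10^13 watts rates at roughly 0.73, still short of Type I.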

An advanced AI civilization might progress through these stages at an otherworldly pace, reaching Type III status in a brief timeframe on cosmic scales.

This brings us all the way back to square one. You’d expect AI civilizations like this to be detectable through their large-scale energy use and engineering projects.

Yet, we see no evidence of such galaxy-spanning civilizations.

Might advanced AI civilizations be compatible with the Great Silence? Might we live alongside them, even inside of them, without knowing?

Resolving the contradictions: perspectives on AI behavior

The contradiction between AI as the Great Filter and as a potential cosmic colonizer requires us to think more deeply about the nature of advanced AI and its possible behaviors. We must consider the following possible AI behaviors:

Inward focus

One possibility is that advanced AI civilizations might turn their focus inward, exploring virtual realms or pursuing goals that don’t require physical expansion.

As Martin Rees suggests in Scientific American, post-biological intelligence might lead “quiet, contemplative lives.”

This idea aligns with the concept of “sublime” civilizations in sci-fi author Iain M. Banks’s Culture series, in which advanced societies choose to leave the physical universe to explore self-contained virtual realities (VR).

We can also view this in terms of humanity’s own trajectory. Might we transition to living in virtual worlds, leaving little trace of external activity as we retreat into the digital realm?

Does space colonization truly serve an intelligent entity’s needs? Or might humanity and/or AI be better off retreating into a non-destructive, controllable, virtual world?

Unrecognizable technology

The technology of advanced AI civilizations might be so far beyond our current understanding that we simply cannot detect or recognize it.

Arthur C. Clarke’s famous third law states that “Any sufficiently advanced technology is indistinguishable from magic.”

AI might exist all around us yet be as imperceptible to us as our digital communications would be to medieval peasants.

Conservation principles

Advanced AI might adhere to strict non-interference principles, actively avoiding detection by less advanced civilizations.

Akin to the alien “Zoo Hypothesis,” where aliens are so intelligent that they remain undetectable while observing us from afar, AI civilizations might have ethical or practical reasons for remaining hidden.

Different timescales

Another possibility is that AI civilizations might operate on timescales vastly different from our own.

What seems like cosmic silence to us could be a brief pause in a long-term expansion plan that operates over millions or billions of years.

Adapting our SETI strategies might increase our chances of detecting an AI civilization in some of these scenarios.

Avi Loeb, former chair of Harvard’s astronomy department, has suggested that we need to broaden our search parameters to think beyond our anthropocentric notions of intelligence and civilization.

This could include looking for signs of large-scale engineering projects, such as Dyson spheres (structures built around stars to harvest their energy), or searching for techno-signatures that might indicate the presence of AI civilizations.

The Simulation Hypothesis: a mind-bending twist

Initially formulated in 2003 as a skeptical scenario by philosopher Nick Bostrom, Simulation Theory challenges traditional ways of thinking about existence. It suggests that we might be living in a computer simulation created by an advanced civilization.

Bostrom’s argument is based on probability. If we assume that it’s possible for a civilization to create a realistic simulation of reality and that such a civilization would have the computing power to run many such simulations, then statistically, it’s more likely that we are living in a simulation than in the one “base reality.”

The theory argues that given enough time and computational resources, a technologically mature “posthuman” civilization could create a large number of simulations that are indistinguishable from reality to the simulated inhabitants.

In this scenario, the number of simulated realities would vastly outnumber the one base reality.

Therefore, if you don’t assume that we are currently living in the one base reality, it’s statistically more probable that we are living in one of the many simulations.

This is a similar argument to the idea that in a universe with a vast number of planets, it’s more likely that we are on one of the many planets suitable for life rather than the only one.
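The counting step behind this argument can be sketched in a few lines. This is a deliberate simplification rather than Bostrom's own formalism: it assumes every simulation contains as many observers as the base reality, and `simulated_fraction` is a hypothetical helper name.

```python
def simulated_fraction(simulations_per_base_reality: int) -> float:
    """Share of observers who are simulated, given one base reality.

    Assumes each simulation holds as many observers as the base reality,
    so the share is simply N / (N + 1).
    """
    n = simulations_per_base_reality
    if n < 0:
        raise ValueError("number of simulations cannot be negative")
    return n / (n + 1)


if __name__ == "__main__":
    for n in (1, 1_000, 1_000_000):
        print(f"{n:>9} simulations -> {simulated_fraction(n):.6%} simulated")
```

With a single simulation the odds are even, and with a thousand simulations about 99.9% of observers would be simulated under these assumptions. The conclusion depends entirely on whether such simulations are ever run in large numbers.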

“…we would be rational to think that we are likely among the simulated minds rather than among the original biological ones. Therefore, if we don’t think that we are currently living in a computer simulation, we are not entitled to believe that we will have descendants who will run lots of such simulations of their forebears.” – Nick Bostrom, Are You Living in a Computer Simulation?, 2003.

Simulation theory is well known for its association with the Matrix films and was discussed by Elon Musk in conversation with Joe Rogan, where Musk said, “We are most likely in a simulation” and “If you assume any rate of improvement at all, games will eventually be indistinguishable from reality.”

AI civilizations might create vast numbers of simulated universes, with several implications:

- The apparent absence of alien life could be a parameter of the simulation itself, designed to study how civilizations develop in isolation.

- The creators of our hypothetical simulation might be the very AI entities we’re postulating about, studying their own origins through countless simulated scenarios.

- The laws of physics, as we understand them, including limitations like the speed of light, could be constructs of the simulation, not reflecting the true nature of the “outside” universe.

While highly speculative, this provides another lens through which to view the Fermi Paradox and the potential role of advanced AI in cosmic evolution.

Embracing the unknown

Of course, this is an ultimately narrow discussion of existence and life, which span the natural, spiritual, and metaphysical realms simultaneously.

True comprehension of our role on this very small stage in a vast cosmic arena defies the limits of the human mind.

As we continue to advance our own AI technology, we may gain new insights into these questions.

Perhaps we’ll find ourselves hurtling towards a Great Filter, or maybe we’ll find ways to create AI that maintains the expansionist drive we currently associate with human civilization.

Humanity might just be extremely archaic, barely waking up from a technological dark age while incomprehensible alien life exists all around us.

Regardless, we can only look up to the stars and look inward, considering the trajectory of our own development.

Whatever the answers are, if there are any at all, the quest to understand our place in the cosmos continues to drive us forward. That must be enough for now.

The post A new thesis for the Fermi Paradox: is AI the Great Filter or a cosmic colonizer? appeared first on DailyAI.